Hello World as a Tested System

Here is the famous Hello World program in Python.

print("Hello Python world!")

It is impressive how many languages this has been written in. See an interesting site here on GitHub that shows over 1000 versions of it.

It can serve as the first step someone takes into learning a programming language, or simply as a quick check that one's programming environment is working correctly.

It could be run simply like this:

> python hello_world.py

Hello Python world!

Nothing could be simpler. It hardly belongs on a blog site about tackling complexity.


Perhaps it's interesting to look at this simple program through the lens of tackling complexity. Are we making a mountain out of a molehill? Perhaps. Perhaps not. Let's do it anyway.

A question one could rightfully ask is, "How do I test Hello World?" How far down the rabbit hole might this question lead us, and what might we learn from it? It is also surprising how much of what we find might apply more broadly to software development, systems development, etc.

Is it even worth it to investigate the testing of a "Hello world" program (and its slightly modified variants)? Again, perhaps. Perhaps not. Let's see what's there.

One way to test it is, of course, to run it and look at the screen output ourselves, as we did above. Another is to run it in a "test case" and, again, check the screen output (remembering to pass the -s command-line option to pytest, which turns off pytest's output capturing so the printed text is not swallowed).

A test case could look like this:

def test_hello_world():
    print("Hello Python world!")

We can run it with pytest, which gives us this result:

% pytest -s test_hello_world_simple.py

============= test session starts =============

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 1 item

test_hello_world_simple.py Hello Python world!

.

============== 1 passed in 0.01s ===============

But we aren't really testing it; we're just running it from pytest.

What do we want to test? The output of the print statement.

There are a number of ways to test this programmatically (i.e. without using your eyes to verify the output):

def test_hello_world(mocker):
    mock_print = mocker.patch("builtins.print")
    print("Hello Python world!")
    mock_print.assert_called_once_with("Hello Python world!")

This can be run with pytest:

============= test session starts =============

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 1 item

test_hello_world.py . [100%]

========== 1 passed in 0.01s ============

And if there is an error (let's say a missing exclamation point):

def test_hello_world(mocker):
    mock_print = mocker.patch("builtins.print")
    print("Hello Python world")
    mock_print.assert_called_once_with("Hello Python world!")

We get an error:

=================================== FAILURES ===================================

_______________________________ test_hello_world _______________________________

self = <MagicMock name='print' id='4400075728'>, args = ('Hello Python world!',)

kwargs = {}, expected = call('Hello Python world!')

actual = call('Hello Python world')

_error_message = <function NonCallableMock.assert_called_with.<locals>._error_message at 0x106460ae0>

cause = None

def assert_called_with(self, /, *args, **kwargs):

"""assert that the last call was made with the specified arguments.

Raises an AssertionError if the args and keyword args passed in are

different to the last call to the mock."""

if self.call_args is None:

expected = self._format_mock_call_signature(args, kwargs)

actual = 'not called.'

error_message = ('expected call not found.\nExpected: %s\n Actual: %s'

% (expected, actual))

raise AssertionError(error_message)

def _error_message():

msg = self._format_mock_failure_message(args, kwargs)

return msg

expected = self._call_matcher(_Call((args, kwargs), two=True))

actual = self._call_matcher(self.call_args)

if actual != expected:

cause = expected if isinstance(expected, Exception) else None

> raise AssertionError(_error_message()) from cause

E AssertionError: expected call not found.

E Expected: print('Hello Python world!')

E Actual: print('Hello Python world')

/opt/homebrew/Cellar/python@3.12/3.12.11/Frameworks/Python.framework/Versions/3.12/lib/python3.12/unittest/mock.py:949: AssertionError

During handling of the above exception, another exception occurred:

self = <MagicMock name='print' id='4400075728'>, args = ('Hello Python world!',)

kwargs = {}

def assert_called_once_with(self, /, *args, **kwargs):

"""assert that the mock was called exactly once and that that call was

with the specified arguments."""

if not self.call_count == 1:

msg = ("Expected '%s' to be called once. Called %s times.%s"

% (self._mock_name or 'mock',

self.call_count,

self._calls_repr()))

raise AssertionError(msg)

> return self.assert_called_with(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

E AssertionError: expected call not found.

E Expected: print('Hello Python world!')

E Actual: print('Hello Python world')

E

E pytest introspection follows:

E

E Args:

E assert ('Hello Python world',) == ('Hello Python world!',)

E

E At index 0 diff: 'Hello Python world' != 'Hello Python world!'

E Use -v to get more diff

/opt/homebrew/Cellar/python@3.12/3.12.11/Frameworks/Python.framework/Versions/3.12/lib/python3.12/unittest/mock.py:961: AssertionError

During handling of the above exception, another exception occurred:

mocker = <pytest_mock.plugin.MockerFixture object at 0x10643e840>

def test_hello_world(mocker):

mock_print = mocker.patch("builtins.print")

print("Hello Python world")

> mock_print.assert_called_once_with("Hello Python world!")

E AssertionError: expected call not found.

E Expected: print('Hello Python world!')

E Actual: print('Hello Python world')

E

E pytest introspection follows:

E

E Args:

E assert ('Hello Python world',) == ('Hello Python world!',)

E

E At index 0 diff: 'Hello Python world' != 'Hello Python world!'

E Use -v to get more diff

test_hello_world.py:4: AssertionError

=========================== short test summary info ============================

FAILED test_hello_world.py::test_hello_world - AssertionError: expected call not found.

============================== 1 failed in 0.09s ===============================

Pretty noisy output. All you really need to see are the following lines from the error message:

E Expected: print('Hello Python world!')
E Actual: print('Hello Python world')

We can also run tests from a bash shell using BATS (Bash Automated Testing System), which might be considered an integration, end-to-end, or even user acceptance test:

# hello.py - The program to test
def hello_world():
    print("Hello Python world!")

if __name__ == "__main__":
    hello_world()

#!/usr/bin/env bats
# test.bats

@test "hello.py prints correct message" {
    run python hello.py
    [ "$status" -eq 0 ]
    [ "$output" = "Hello Python world!" ]
}

% bats test.bats
test.bats
 ✓ hello.py prints correct message

1 test, 0 failures

Let's modify the hello world program in a few small ways:

- create a function and move the print statement there

- add a parameter to the function so we can change the greeting beginning ("Hello", "Hallo", etc.)

- add a return statement letting us know all went well in the function

def greet(hello):
    print(f"{hello} Python world!")
    return True

We have added a function with an input (the hello parameter) and an output (the return statement).

Next we can change the test.

def test_hello_world():
    assert greet('Hello')

This test passes, but we still haven't verified the output.

Let's make another change to accept user input so it says the greeting with the particular user's name.

def greet(hello):
    name = input("Please enter your name: ")
    print(f"{hello} {name}!")
    return True

if __name__ == "__main__":
    greet("Hello")

% python hello_direct_input.py
Please enter your name: Dave
Hello Dave!

A test case could look like:

def test_hello_world():
    assert greet('Hello')

===========test session starts ==========

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 1 item

test_hello_world_simple3.py Please enter your name: Dave

Hello Dave!

============= 1 passed in 4.14s ============

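The test hangs (blocks) when the program prompts the user with "Please enter your name: " and waits for someone to type a name (note the 4.14s run time, compared with 0.01s for the other runs).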

Let's look at this function from a slightly different perspective. Here it is again:

def greet(hello):
    name = input("Please enter your name: ")
    print(f"{hello} {name}!")
    return True

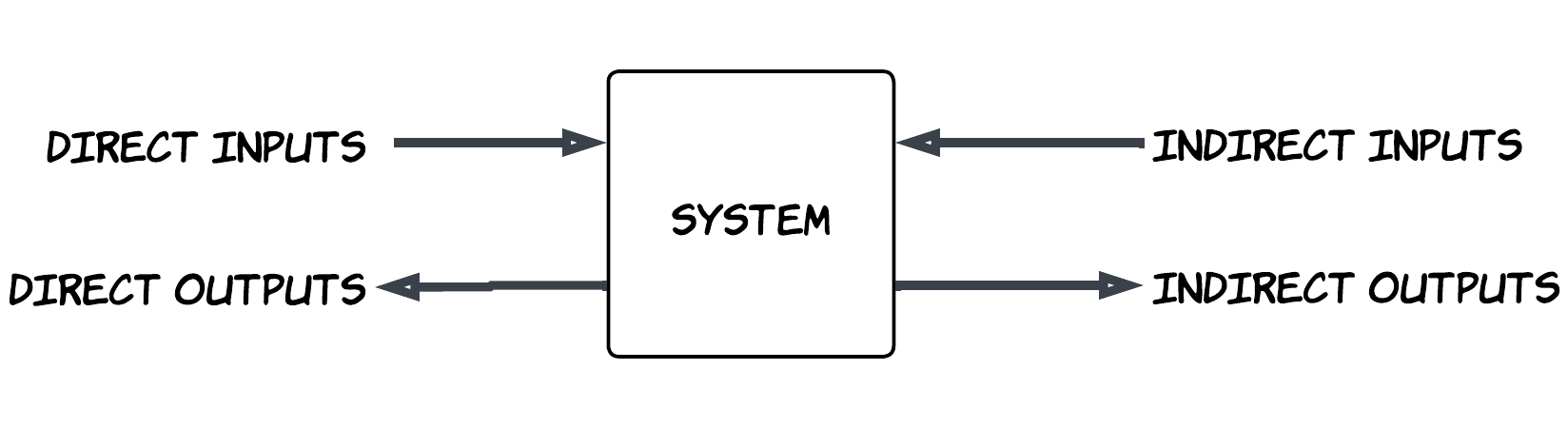

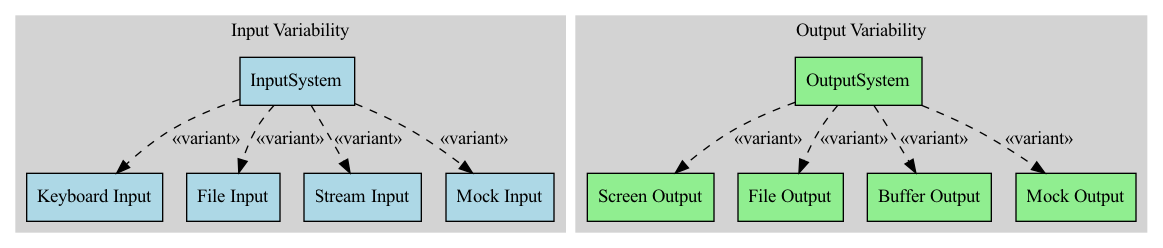

We could view this function in terms of inputs and outputs. It has two inputs:

- The hello parameter

- The user-entered name

It has two outputs:

- Greeting message that goes to the screen

- The return value of the function

We can further classify these by how easy they are to control or verify, labeling each one as either direct or indirect. Direct means the test can control it, or easily access it to verify it (a function argument or a return value). Indirect means that control or access has to go through something else, such as the keyboard or the screen.

Two inputs:

- The hello parameter (direct)

- The user-entered name (indirect)

Two outputs:

- Greeting message that goes to the screen (indirect)

- The return value of the function (direct)

We can revisit the question we asked earlier: "How do I test this program?"

When we ran our test case, we controlled the greeting word ("Hello") and verified the return value of the function (True). But we had to deal with the indirect input and indirect output ourselves (i.e. enter our name via the keyboard and look at the screen to make sure the proper message came out). The test case even blocks on the input call and waits for us to enter the name.

Perhaps there is a different way to handle this where we can take control of all the inputs and outputs and use them in our test case. What if we did something like this:

def test_patch_multiple_functions(mocker):
    mock_print = mocker.patch('builtins.print')
    mock_input = mocker.patch('builtins.input', return_value="Dave")
    assert greet("Hello")
    mock_input.assert_called_once_with("Please enter your name: ")
    mock_print.assert_called_once_with("Hello Dave!")

% pytest -s test_hello_world_simple3.py

============================= test session starts ==============================

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 1 item

test_hello_world_simple3.py .

=============== 1 passed in 0.01s ==============

This certainly seems to follow the tried-and-true Arrange-Act-Assert and Given-When-Then patterns.
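To make that mapping explicit, here is the same test with each phase labeled in comments. This sketch uses the standard library's unittest.mock.patch directly instead of pytest-mock's mocker fixture (which wraps it), so it needs no plugin; the test name is just illustrative.

```python
from unittest.mock import patch


def greet(hello):
    name = input("Please enter your name: ")
    print(f"{hello} {name}!")
    return True


def test_greet_arrange_act_assert():
    # Arrange (Given): replace both indirect dependencies with mocks
    with patch("builtins.input", return_value="Dave") as mock_input, \
         patch("builtins.print") as mock_print:
        # Act (When): call greet with its direct input
        result = greet("Hello")

    # Assert (Then): check the direct output...
    assert result is True
    # ...and the indirect input and output
    mock_input.assert_called_once_with("Please enter your name: ")
    mock_print.assert_called_once_with("Hello Dave!")
```

Keeping the assertions outside the with block also makes it visible that the patches are gone by the time we check the results.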

Right off the bat, it is easier to run, because the program no longer blocks waiting for a real user's input. Beyond that, it does not require us to look at the screen to verify that the program worked; the test case does all of that. In terms of the input and output types above, we now control all of the direct and indirect inputs and can verify all of the direct and indirect outputs.

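We are using the mocking capabilities of the pytest-mock plugin in this case (its mocker fixture is a thin wrapper around the standard library's unittest.mock). This is not the only way to do it, but it works well for our purposes.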
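For comparison, one common alternative to mocking is dependency injection: pass the input and output functions in as parameters, so the indirect input and output become direct ones. The sketch below assumes we are free to change greet's signature; the read and write parameter names are hypothetical.

```python
def greet(hello, read=input, write=print):
    """Hypothetical variant of greet with injectable I/O functions."""
    name = read("Please enter your name: ")
    write(f"{hello} {name}!")
    return True


def test_greet_with_injected_io():
    prompts = []  # records what greet asked for
    lines = []    # records what greet "printed"

    def fake_read(prompt):
        prompts.append(prompt)
        return "Dave"

    # No patching needed: the fakes are ordinary arguments
    assert greet("Hello", read=fake_read, write=lines.append) is True
    assert prompts == ["Please enter your name: "]
    assert lines == ["Hello Dave!"]
```

Called as greet("Hello"), it still uses the real input and print. The trade-off is a slightly busier signature in exchange for tests that never touch builtins.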

As is the case with so many software solutions, there are many ways to do the same thing. Courtesy of Claude.ai, here are 10 different ways to automatically capture the output of a print statement from within a Python test case.

# print_test_methods.py
import io
import subprocess
import sys
import unittest
from contextlib import redirect_stdout
from unittest.mock import MagicMock, patch

from hello import hello_world  # hello.py from the BATS example above

# ===== METHOD 1: Using pytest's capsys fixture =====
def test_with_capsys(capsys):
    """Test using pytest's capsys fixture to capture stdout"""
    hello_world()
    captured = capsys.readouterr()
    assert captured.out == "Hello Python world!\n"
    assert captured.err == ""

# ===== METHOD 2: Using unittest.mock to patch print =====
def test_with_mock_print():
    """Test by mocking the print function itself"""
    with patch('builtins.print') as mock_print:
        hello_world()
        mock_print.assert_called_once_with("Hello Python world!")

# ===== METHOD 3: Using unittest.mock to patch sys.stdout =====
def test_with_mock_stdout():
    """Test by mocking sys.stdout"""
    mock_stdout = MagicMock()
    with patch('sys.stdout', mock_stdout):
        hello_world()
    # print() writes the text and the trailing "\n" in separate write() calls
    mock_stdout.write.assert_any_call("Hello Python world!")

# ===== METHOD 4: Using subprocess to run as separate process =====
def test_with_subprocess():
    """Test by running the script as a subprocess"""
    result = subprocess.run(
        [sys.executable, '-c', 'print("Hello Python world!")'],
        capture_output=True,
        text=True
    )
    assert result.stdout == "Hello Python world!\n"
    assert result.returncode == 0

def test_with_subprocess_file():
    """Test by running the actual hello.py file as subprocess"""
    result = subprocess.run(
        [sys.executable, 'hello.py'],
        capture_output=True,
        text=True
    )
    assert result.stdout == "Hello Python world!\n"
    assert result.returncode == 0

# ===== METHOD 5: Using contextlib.redirect_stdout =====
def test_with_redirect_stdout():
    """Test using contextlib to redirect stdout to StringIO"""
    f = io.StringIO()
    with redirect_stdout(f):
        hello_world()
    output = f.getvalue()
    assert output == "Hello Python world!\n"

# ===== METHOD 6: Manually replacing sys.stdout =====
def test_with_stringio():
    """Test by manually replacing sys.stdout with StringIO"""
    old_stdout = sys.stdout
    sys.stdout = io.StringIO()
    try:
        hello_world()
        output = sys.stdout.getvalue()
        assert output == "Hello Python world!\n"
    finally:
        sys.stdout = old_stdout

# ===== METHOD 7: Using pytest's capfd (captures file descriptors) =====
def test_with_capfd(capfd):
    """Test using pytest's capfd fixture (lower level than capsys)"""
    hello_world()
    captured = capfd.readouterr()
    assert captured.out == "Hello Python world!\n"

# ===== METHOD 8: Using unittest.TestCase =====
class TestHelloWorld(unittest.TestCase):
    def test_with_unittest(self):
        """Test using unittest framework with StringIO"""
        # Save the current stdout (pytest may already have replaced it)
        old_stdout = sys.stdout
        captured_output = io.StringIO()
        sys.stdout = captured_output
        try:
            hello_world()
            self.assertEqual(captured_output.getvalue(), "Hello Python world!\n")
        finally:
            sys.stdout = old_stdout

# ===== METHOD 9: Using doctest =====
def hello_world_with_doctest():
    """
    Print hello world message.

    This is the awkward way to test print with doctest:

    >>> import io, sys
    >>> old_stdout = sys.stdout
    >>> sys.stdout = io.StringIO()
    >>> hello_world_with_doctest()
    >>> output = sys.stdout.getvalue()
    >>> sys.stdout = old_stdout
    >>> output
    'Hello Python world!\\n'
    """
    print("Hello Python world!")

# ===== METHOD 10: Better doctest approach - test a function that returns =====
def get_hello_message():
    """
    Get hello world message.

    >>> get_hello_message()
    'Hello Python world!'
    """
    return "Hello Python world!"

% pytest print_test_methods.py --doctest-modules -v

============================= test session starts ==============================

print_test_methods.py::print_test_methods.add_numbers PASSED [ 8%]

print_test_methods.py::print_test_methods.get_hello_message PASSED [ 16%]

print_test_methods.py::print_test_methods.hello_world_with_doctest PASSED [ 25%]

print_test_methods.py::test_with_capsys PASSED [ 33%]

print_test_methods.py::test_with_mock_print PASSED [ 41%]

print_test_methods.py::test_with_mock_stdout PASSED [ 50%]

print_test_methods.py::test_with_subprocess PASSED [ 58%]

print_test_methods.py::test_with_subprocess_file PASSED [ 66%]

print_test_methods.py::test_with_redirect_stdout PASSED [ 75%]

print_test_methods.py::test_with_stringio PASSED [ 83%]

print_test_methods.py::test_with_capfd PASSED [ 91%]

print_test_methods.py::TestHelloWorld::test_with_unittest PASSED [100%]

============================== 12 passed in 0.08s ==============================

%

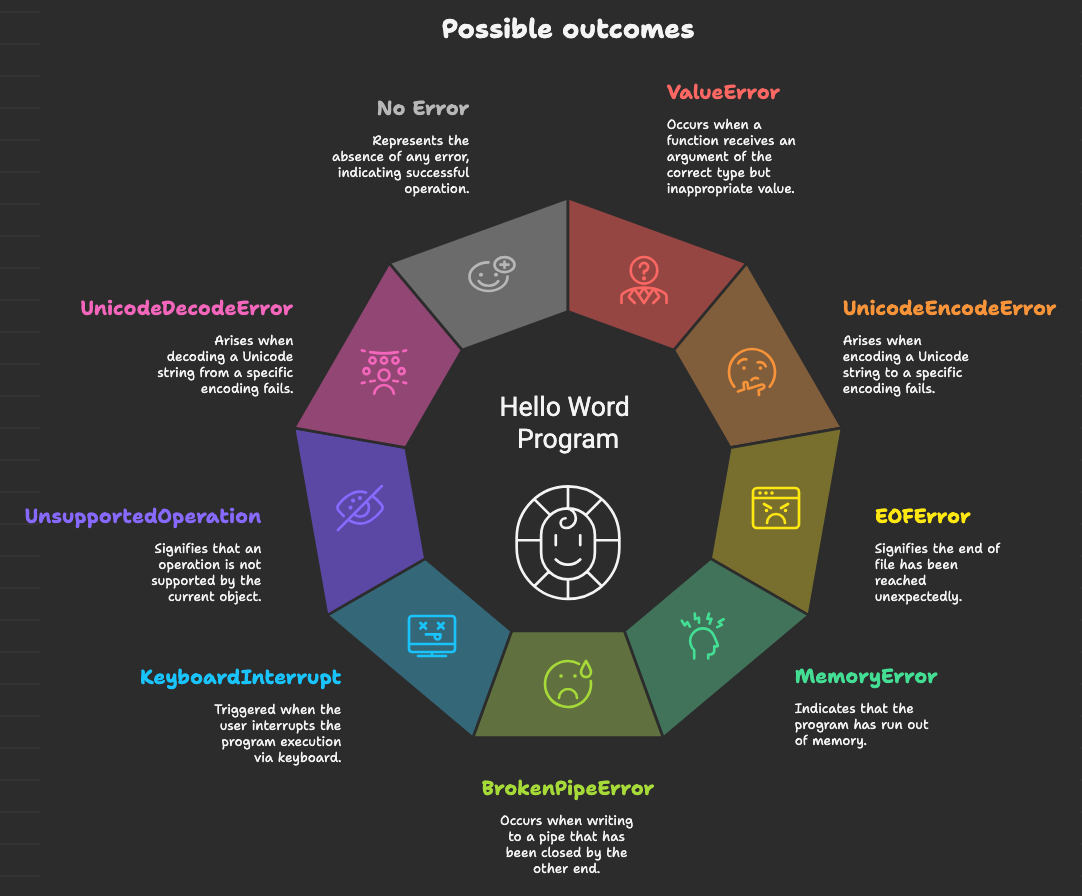

Now another question could be:

"What could possibly go wrong?"

... and of course

"How would we test that?"

And finally,

"What are we supposed to do when that 'something(s)' goes wrong and how can we verify that we have done those actions correctly?"

Lots of questions to ask. These are not the questions we typically ask when creating and running such a simple "Hello world" program. But we can still ask them, and the answers are more widely applicable and relevant than we might have thought.

More specifically now, we could ask, "Could anything go wrong with the input call?" How about the print call? How would we test these and what do we do in the case that they do not function as we expect?

In the widely used Python implementations, the input and print functions from the builtins module can both raise exceptions.

Here is an example of how to make the print call raise a BrokenPipeError:

for i in range(100000):
    # Print long lines so stdout's buffer fills and is flushed to the pipe quickly.
    # Once head -n 1 has read its one line and exited, the pipe has no reader left,
    # so the next write/flush inside print() raises BrokenPipeError.
    print(f"Line {i}: " + "X" * 10000)

% python broken_method2.py | head -n 1
Line 0: XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX
broken_method2.py", line 6, in <module>
    print(f"Line {i}: " + "X" * 10000)
BrokenPipeError: [Errno 32] Broken pipe
Exception ignored in: <_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>
BrokenPipeError: [Errno 32] Broken pipe

When run in a particular way (piped to head -n 1), this program makes the print function raise a `BrokenPipeError` exception, which bubbles up all the way to the user because the program does not handle it.
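A shell pipe is awkward to set up inside a test. One way to get the same exception in-process, sketched here with a hypothetical BrokenPipeStream class, is to point sys.stdout at an object whose write() raises BrokenPipeError, using the standard library's contextlib.redirect_stdout. The real print() still runs; only the stream underneath it is fake.

```python
import contextlib


class BrokenPipeStream:
    """Hypothetical stand-in for a stdout whose reader (e.g. head) has exited."""

    def write(self, text):
        # errno 32 is EPIPE on Linux and macOS, matching the traceback above
        raise BrokenPipeError(32, "Broken pipe")

    def flush(self):
        pass


def hello_world():
    print("Hello Python world!")


def run_with_broken_stdout():
    """Run hello_world against a broken stdout and return the exception raised."""
    with contextlib.redirect_stdout(BrokenPipeStream()):
        try:
            hello_world()
        except BrokenPipeError as exc:
            return exc
    return None
```

redirect_stdout restores the original sys.stdout when the with block exits, even on the early return.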

Here's another. This may seem a bit contrived, but it is possible, and I've certainly seen worse.

import io

# Create a file/stream that only accepts ASCII
ascii_stream = io.TextIOWrapper(
    io.BytesIO(),
    encoding='ascii',
    errors='strict'  # Will raise error on non-ASCII chars
)

print("Hello World 你好 مرحبا", file=ascii_stream)
ascii_stream.flush()

% python test_unicode_error.py
Traceback (most recent call last):
  File "test_unicode_error.py", line 10, in <module>
    print("Hello World 你好 مرحبا", file=ascii_stream)
UnicodeEncodeError: 'ascii' codec can't encode characters in position 12-13: ordinal not in range(128)

One could also imagine the system running out of memory for some reason (similar to a disk-full scenario for a file system write). I have seen the latter happen in the real world, where an entire financial system stopped functioning due to a full disk.

# Uncomment at your own risk:
# try:
#     # The 10-billion-character string may fail to allocate before print() is even called
#     print("x" * (10**10))  # 10 billion characters
# except MemoryError as e:
#     print(f"Caught MemoryError: {e}")

A memory error is not as easy to induce as the others, but it is possible, and perhaps no more (or less) probable than they are. That is even more reason to investigate ways to induce these exceptions so that they seem real to the program without actually happening for real. It almost reminds us of the question Morpheus asked in The Matrix: "What is real? How do you define 'real'? If you're talking about what you can feel, what you can smell, what you can taste and see, then 'real' is simply electrical signals interpreted by your brain." (ref )

Here's another that causes a ValueError:

import io

file_stream = io.StringIO()
file_stream.close()
print("Hello World", file=file_stream)

% python test_closed_file.py
Traceback (most recent call last):
  File "test_closed_file.py", line 5, in <module>
    print("Hello World", file=file_stream)
ValueError: I/O operation on closed file

Some of these are not directly applicable to our hello world, since our call is made with a single string parameter. But it is good to know what sorts of exceptions can be raised by the various uses of the print function. Then again, let's put on the hat of someone who is modifying and evolving the hello world program, perhaps also passing in the output file, which could be stdout but could also be something else, and thus introducing more possible exceptions.
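Putting on that hat, here is a hypothetical variant (greet_to and its file parameter are assumptions, not part of the program so far) that lets the caller choose the output stream, together with a check that a closed stream surfaces as a ValueError from inside the function.

```python
import io


def greet_to(hello, name, file=None):
    """Hypothetical greet variant that writes to a caller-chosen stream.

    file=None makes print() fall back to sys.stdout, as it normally does.
    """
    print(f"{hello} {name}!", file=file)
    return True


def greet_closed_stream():
    """Return the ValueError message raised when the stream is already closed."""
    stream = io.StringIO()
    stream.close()  # simulate a stream someone else already closed
    try:
        greet_to("Hello", "Dave", file=stream)
    except ValueError as exc:
        return str(exc)
    return None
```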

I think we made the point regarding possible errors/exceptions that can come from the print call.

What about the input call? What types of exceptions might be raised by that function call?

Here's one.

user_input = input("Enter something: ")

% python eof_input.py < /dev/null
Enter something: Traceback (most recent call last):
  File "eof_input.py", line 1, in <module>
    user_input = input("Enter something: ")
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^
EOFError: EOF when reading a line

And two others:

# Run and press Ctrl-C at the prompt
user_input = input("Enter something: ")

% python eof_input.py
Enter something: ^CTraceback (most recent call last):
  File "eof_input.py", line 1, in <module>
    user_input = input("Enter something: ")
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^
KeyboardInterrupt

import sys

sys.stdin.close()
user_input = input("This will fail: ")

% python runtime_error_input.py
This will fail: Traceback (most recent call last):
  File "runtime_error_input.py", line 4, in <module>
    user_input = input("This will fail: ")
                 ^^^^^^^^^^^^^^^^^^^^^^^^^
ValueError: I/O operation on closed file.

And two more for the input function:

import os
import sys

original_stdin = sys.stdin

with open('/dev/null', 'w') as f:
    sys.stdin = f
    user_input = input("This should fail: ")

% python os_error_input.py
This should fail: Traceback (most recent call last):
  File "/chapter_01/tests/os_error_input.py", line 8, in <module>
    user_input = input("This should fail: ")
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^^
io.UnsupportedOperation: not readable

import sys
import io

original_stdin = sys.stdin
invalid_bytes = b'\xff\xfe'

sys.stdin = io.TextIOWrapper(
    io.BytesIO(invalid_bytes + b'\n'),
    encoding='utf-8',
    errors='strict'
)

user_input = input("This might fail: ")

% python decode_error.py
This might fail: Traceback (most recent call last):
  File "decode_error.py", line 13, in <module>
    user_input = input("This might fail: ")
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "<frozen codecs>", line 322, in decode
UnicodeDecodeError: 'utf-8' codec can't decode byte 0xff in position 0: invalid start byte

It is interesting that exceptions can come from how we call a function, from what happens elsewhere in our Python program, or from how the program is run or used (e.g. piping a printing program to head -n 1, taking input from /dev/null, or typing Ctrl-C). Some of these can be particularly surprising because you don't always control these external aspects, but you should know how the program could be used and make it behave gracefully in these circumstances.

Some of this could even come from externally provided configuration, perhaps maintained and modified by others and yet processed by our program (for example, the encoding used on the input and output streams).

Enough already, eh? I think, again, we have made our point about possible exceptions emanating from both input and print, and from the environment in which the program should behave well.

One last point: From the perspective of the greet function, we are mostly concerned that the exception/error condition can occur, not so much how they were induced.
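To make that concrete, the sketch below (standard library only; the helper names are hypothetical) induces the same EOFError in greet two different ways: once by handing the real input() an empty stdin, roughly what python eof_input.py < /dev/null did, and once by patching input with a side_effect, as the mocks in this post do. greet cannot tell the difference.

```python
import io
from contextlib import redirect_stdout
from unittest.mock import patch


def greet(hello):
    name = input("Please enter your name: ")
    print(f"{hello} {name}!")
    return True


def eof_from_empty_stdin():
    """Real input(), fake stdin: readline() returns "" so input() raises EOFError."""
    # stdout is redirected too, so the prompt does not clutter the terminal
    with patch("sys.stdin", io.StringIO("")), redirect_stdout(io.StringIO()):
        try:
            greet("Hello")
        except EOFError as exc:
            return exc
    return None


def eof_from_mock():
    """Fake input(): the mock raises EOFError directly."""
    with patch("builtins.input", side_effect=EOFError("EOF when reading a line")):
        try:
            greet("Hello")
        except EOFError as exc:
            return exc
    return None
```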

Now that we have demonstrated that these errors can occur, we can turn to how to test the hello world program, what to do in the presence of these exceptions, and how to verify that we are doing the right thing.

Again, here is the program:

def greet(hello):
    name = input("Please enter your name: ")
    print(f"{hello} {name}!")
    return True

So, how might we test some of these exceptional circumstances? Recall that we tested the normal (successful) path through the code with the following test case:

def test_patch_multiple_functions(mocker):
    mock_print = mocker.patch('builtins.print')
    mock_input = mocker.patch('builtins.input', return_value="Dave")
    assert greet("Hello")
    mock_input.assert_called_once_with("Please enter your name: ")
    mock_print.assert_called_once_with("Hello Dave!")

% pytest -s test_hello_world_simple3.py

============================= test session starts ==============================

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 1 item

test_hello_world_simple3.py .

=============== 1 passed in 0.01s ==============

Not only can these mocks inject data from dependencies and capture outputs sent to dependencies, they can also raise exceptions from dependencies and capture the actions and outputs of the error-handling code.

def greet(hello):
    name = input("Please enter your name: ")
    print(f"{hello} {name}!")

def test_input_eof_error(mocker):
    """Test EOFError from input (Ctrl+D on Unix, Ctrl+Z on Windows)."""
    mocker.patch('builtins.input', side_effect=EOFError)
    greet("Hello")

% pytest test_eof_input.py

============================= test session starts ==============================

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 1 item

test_eof_input.py F [100%]

=================================== FAILURES ===================================

_____________________________ test_input_eof_error _____________________________

mocker =

def test_input_eof_error(mocker):

"""Test EOFError from input (Ctrl+D on Unix, Ctrl+Z on Windows)."""

mocker.patch('builtins.input', side_effect=EOFError)

> greet("Hello")

test_eof_input.py:8:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

test_eof_input.py:2: in greet

name = input("Please enter your name: ")

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

/opt/homebrew/Cellar/python@3.12/3.12.11/Frameworks/Python.framework/Versions/3.12/lib/python3.12/unittest/mock.py:1139: in __call__

return self._mock_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

/opt/homebrew/Cellar/python@3.12/3.12.11/Frameworks/Python.framework/Versions/3.12/lib/python3.12/unittest/mock.py:1143: in _mock_call

return self._execute_mock_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self =

args = ('Please enter your name: ',), kwargs = {}, effect =

def _execute_mock_call(self, /, *args, **kwargs):

# separate from _increment_mock_call so that awaited functions are

# executed separately from their call, also AsyncMock overrides this method

effect = self.side_effect

if effect is not None:

if _is_exception(effect):

> raise effect

E EOFError

/opt/homebrew/Cellar/python@3.12/3.12.11/Frameworks/Python.framework/Versions/3.12/lib/python3.12/unittest/mock.py:1198: EOFError

=========================== short test summary info ============================

FAILED test_eof_input.py::test_input_eof_error - EOFError

============================== 1 failed in 0.09s ===============================

%

Running it with pytest produces quite a noisy error, but it fails nonetheless, with an EOFError coming out of the input call. With little effort we were able to simulate an EOFError. Did we really reach the end of file? No, but the program "thinks" we did (i.e. we are in the Matrix). The greet function does not know how the EOFError was caused; it only sees that it was raised, and it either deals with it or it doesn't. Either way, this test is easy to write, and it is a valid way to start seeing how the greet function deals with this situation. Another way to look at it is that we are essentially modeling the external dependencies of the hello world system's central component.

Just to get this test to pass we can modify the test case slightly and run it again:

import pytest

def greet(hello):
    name = input("Please enter your name: ")
    print(f"{hello} {name}!")

def test_input_eof_error(mocker):
    """Test EOFError from input (Ctrl+D on Unix, Ctrl+Z on Windows)."""
    mocker.patch('builtins.input', side_effect=EOFError)
    with pytest.raises(EOFError):
        greet("Hello")

% pytest test_eof_input_with.py

============================= test session starts ==============================

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 1 item

test_eof_input_with.py . [100%]

============================== 1 passed in 0.01s ===============================

It's great that the test passes, but all we are really testing is that an exception can be raised and bubble up through our entire program. What we actually want to test is that the exception is dealt with in some way (even if that is just logging a message and returning False). Let's catch the exception and log a message.

import pytest
import logging

def greet(hello):
    try:
        name = input("Please enter your name: ")
    except EOFError as e:
        logging.error(f"EOFError {e}")
        return False
    print(f"{hello} {name}!")
    return True

def test_greet_eoferror_with_logging(mocker):
    mock_input = mocker.patch("builtins.input",
                              side_effect=EOFError("EOF when reading a line"))
    mock_logger = mocker.patch("logging.error")
    logging.basicConfig(level=logging.ERROR)
    assert greet("Hello") is False
    mock_input.assert_called_once_with("Please enter your name: ")
    mock_logger.assert_called_once_with("EOFError EOF when reading a line")

% pytest -s test_eof_input_with_logging.py

============================= test session starts ==============================

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 1 item

test_eof_input_with_logging.py .

============================== 1 passed in 0.01s ===============================

Of course, this raises the question of what the user wants to do. Do they want to exit by typing Ctrl-D? Should we ask whether they are sure they want to quit, or do we just log it? Now one can decide what to do in this case and verify that it is done (here, that the logging is performed).
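Patching logging.error works, but it ties the test to that exact call. A sketch of an alternative, assuming we would rather check the log record that is actually emitted, uses the standard library's unittest assertLogs context manager (pytest's caplog fixture plays the same role in pytest-native tests). The greet below is the version above; check_eof_is_logged is a hypothetical helper name.

```python
import logging
import unittest
from unittest.mock import patch


def greet(hello):
    try:
        name = input("Please enter your name: ")
    except EOFError as e:
        logging.error(f"EOFError {e}")
        return False
    print(f"{hello} {name}!")
    return True


def check_eof_is_logged():
    """Verify the emitted log record instead of mocking logging.error."""
    checker = unittest.TestCase()  # used only for its assertLogs helper
    with patch("builtins.input", side_effect=EOFError("EOF when reading a line")):
        # Captures records from the root logger at ERROR level and above
        with checker.assertLogs(level="ERROR") as logs:
            result = greet("Hello")
    return result, logs.output
```

Because the real logging machinery runs, this version would still pass if greet switched from logging.error to a root logger's error method, which a mock of logging.error would not tolerate.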

How about for another exception?

import logging

def greet(hello):
    try:
        name = input("Please enter your name: ")
    except EOFError as e:
        logging.error(f"EOFError {e}")
        return False
    except KeyboardInterrupt as e:
        logging.error(f"KeyboardInterrupt {e}")
        return False
    print(f"{hello} {name}!")
    return True

def test_greet_keyboardinterrupt_with_logging(mocker):
    mock_input = mocker.patch("builtins.input",
                              side_effect=KeyboardInterrupt("when inputting from keyboard"))
    mock_logger = mocker.patch("logging.error")
    mock_print = mocker.patch("builtins.print")
    logging.basicConfig(level=logging.ERROR)
    assert greet("Hello") is False
    mock_input.assert_called_once_with("Please enter your name: ")
    mock_logger.assert_called_once_with("KeyboardInterrupt when inputting from keyboard")
    mock_print.assert_not_called()

% pytest -s test_keyboard_input_with_logging.py

============================= test session starts ==============================

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 1 item

test_keyboard_input_with_logging.py .

============================== 1 passed in 0.02s ===============================

%

One has to decide whether each exception calls for different handling. Here we only log, but if each exception had its own handling code, we could test each of those paths in the same way.
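While every exception gets the same treatment, the tests can be table-driven rather than copied. A minimal sketch, assuming the two-exception greet() above (copied in so the block stands alone): each row pairs the exception the mocked input() raises with the message we expect logging.error to receive, and the loop also checks that nothing is printed. CASES and check_exception_paths are illustrative names; in a pytest suite, @pytest.mark.parametrize plays the role of the loop.

```python
import logging
from unittest.mock import patch


def greet(hello):
    # Copy of the two-exception greet(), so this block runs on its own.
    try:
        name = input("Please enter your name: ")
    except EOFError as e:
        logging.error(f"EOFError {e}")
        return False
    except KeyboardInterrupt as e:
        logging.error(f"KeyboardInterrupt {e}")
        return False
    print(f"{hello} {name}!")


# Each row: (exception raised by the mocked input(), expected log message).
CASES = [
    (EOFError("EOF when reading a line"),
     "EOFError EOF when reading a line"),
    (KeyboardInterrupt("when inputting from keyboard"),
     "KeyboardInterrupt when inputting from keyboard"),
]


def check_exception_paths(cases=CASES):
    """Run greet() once per row; return how many rows were checked."""
    for exc, expected_log in cases:
        with patch("builtins.input", side_effect=exc) as mock_input, \
                patch("logging.error") as mock_error, \
                patch("builtins.print") as mock_print:
            assert greet("Hello") is False
            mock_input.assert_called_once_with("Please enter your name: ")
            mock_error.assert_called_once_with(expected_log)
            mock_print.assert_not_called()
    return len(cases)


if __name__ == "__main__":
    print(f"checked {check_exception_paths()} exception paths")
```

If one exception later needs its own handling, only its row changes, which keeps the decision about "what should happen" visible in one place.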

We can do something similar with the print function call.
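For print(), the realistic failures are mostly OSError and its subclasses, for example a BrokenPipeError when the output is piped into a command such as head that exits early. The sketch below assumes a hypothetical greet() that wraps print() in its own try block and logs the concrete exception type; the check makes the mocked print() raise, then inspects the return value and the logged message. It also asserts two facts about the exception hierarchy that decide which except clause runs: BrokenPipeError is a subclass of OSError, and IOError is just another name for OSError in Python 3.

```python
import logging
from unittest.mock import patch


def greet(hello):
    """Hypothetical variant of greet() that also guards the print() call."""
    try:
        name = input("Please enter your name: ")
    except (EOFError, KeyboardInterrupt) as e:
        logging.error(f"{type(e).__name__} {e}")
        return False
    try:
        print(f"{hello} {name}!")
    except OSError as e:
        # Also catches BrokenPipeError, because it subclasses OSError.
        logging.error(f"{type(e).__name__} during print: {e}")
        return False
    return True


def check_print_failure(exc):
    """Make print() raise `exc`; return (greet's result, the logged message)."""
    with patch("builtins.input", return_value="Ada"), \
            patch("builtins.print", side_effect=exc) as mock_print, \
            patch("logging.error") as mock_error:
        result = greet("Hello")
    # Mocks keep their call records after the patches are undone.
    mock_print.assert_called_once_with("Hello Ada!")
    mock_error.assert_called_once()
    return result, mock_error.call_args.args[0]


# The hierarchy that decides which except clause runs:
assert issubclass(BrokenPipeError, OSError)
assert IOError is OSError  # an alias since Python 3.3

if __name__ == "__main__":
    print(check_print_failure(BrokenPipeError("Broken pipe")))
```

Because of that hierarchy, an `except BrokenPipeError` clause placed after `except OSError` can never run; the more specific clause has to come first if it is meant to do something different.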

Let's fast-forward to the whole enchilada and, just for fun, push it to the limit. There are two limits: doing nothing, and doing everything very specifically. Then perhaps there is a middle, more practical, ground. We look at all three.

So the extreme case:

import logging
import sys
import io

# Configure logging
logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s - %(levelname)s - %(message)s',
    handlers=[
        logging.FileHandler('greet.log'),
        logging.StreamHandler(sys.stdout)
    ]
)

def greet(hello):
    """
    Greet a user with comprehensive exception handling and logging.

    Args:
        hello: Greeting message to display
    """
    logger = logging.getLogger(__name__)
    try:
        # Validate the hello parameter
        if not isinstance(hello, str):
            logger.error(f"Invalid greeting type: {type(hello).__name__}. Expected string.")
            hello = str(hello)
            logger.info(f"Converted greeting to string: {hello}")
        if not hello.strip():
            logger.warning("Empty greeting provided, using default.")
            hello = "Hello"
        logger.info("Prompting user for name input")

        # Handle input() exceptions
        try:
            name = input("Please enter your name: ")
            logger.info(f"User input received: {name}")
            # Validate name input
            if not name.strip():
                logger.warning("Empty name entered")
                name = "Guest"
        except EOFError:
            logger.error("EOFError: Input stream closed unexpectedly")
            name = "Guest"
            print("\nNo input detected. Using default name.")
        except KeyboardInterrupt:
            logger.warning("KeyboardInterrupt: User cancelled input")
            print("\n\nInput cancelled by user.")
            sys.exit(0)
        except UnicodeDecodeError as e:
            logger.error(f"UnicodeDecodeError: Cannot decode input - {e}")
            name = "Guest"
            print(f"\nInvalid character encoding in input. Using default name.")
        except RuntimeError as e:
            logger.error(f"RuntimeError during input: {e}")
            # Can occur if input() is called in threads or when stdin is being modified
            name = "Guest"
            print(f"\nRuntime error reading input. Using default name.")
        except OSError as e:
            logger.error(f"OSError during input: {e}")
            # File descriptor issues, closed stdin, etc.
            name = "Guest"
            print(f"\nSystem error reading input. Using default name.")
        except ValueError as e:
            logger.error(f"ValueError during input: {e}")
            # Rare but can occur with stdin issues
            name = "Guest"
            print(f"\nInvalid input format. Using default name.")
        except Exception as e:
            logger.error(f"Unexpected error during input: {type(e).__name__} - {e}")
            name = "Guest"
            print(f"\nError reading input: {e}. Using default name.")

        # Handle print() exceptions
        try:
            greeting_message = f"{hello} {name}!"
            print(greeting_message)
            logger.info(f"Successfully displayed greeting: {greeting_message}")
        except UnicodeEncodeError as e:
            logger.error(f"UnicodeEncodeError during print: {e}")
            # Terminal can't encode certain characters
            safe_message = greeting_message.encode('ascii', 'replace').decode('ascii')
            print(safe_message)
            logger.info("Displayed greeting with ASCII fallback")
        except UnicodeDecodeError as e:
            logger.error(f"UnicodeDecodeError during print: {e}")
            # Rare but can occur with stdout encoding issues
            try:
                print(greeting_message.encode('utf-8', 'replace').decode('utf-8'))
                logger.info("Displayed greeting with UTF-8 fallback")
            except Exception:
                logger.error("Could not print with any encoding")
        except OSError as e:
            logger.error(f"OSError during print: {e}")
            # Output stream closed, broken pipe (SIGPIPE), disk full, etc.
            logger.info(f"Could not print greeting: {greeting_message}")
        except BrokenPipeError as e:
            # Unreachable: BrokenPipeError is a subclass of OSError, caught above
            logger.error(f"BrokenPipeError during print: {e}")
            # Occurs when output is piped to a command that terminates early
            logger.info(f"Output pipe broken, could not complete print: {greeting_message}")
        except IOError as e:
            # Unreachable: IOError is an alias of OSError in Python 3, caught above
            logger.error(f"IOError during print: {e}")
            # General I/O errors with stdout
            logger.info(f"I/O error prevented printing: {greeting_message}")
        except ValueError as e:
            logger.error(f"ValueError during print: {e}")
            # Can occur if sys.stdout is closed
            logger.info(f"Invalid output stream state: {greeting_message}")
        except AttributeError as e:
            logger.error(f"AttributeError during print: {e}")
            # sys.stdout might be None or missing write method
            logger.info(f"Output stream unavailable: {greeting_message}")
        except MemoryError as e:
            logger.error(f"MemoryError during print: {e}")
            # Extremely large strings (unlikely here but possible)
            try:
                print(f"{hello} {name[:50]}!")  # Truncate name
                logger.warning("Printed truncated greeting due to memory constraints")
            except Exception:
                logger.critical("Could not print even truncated message")
        except RecursionError as e:
            logger.error(f"RecursionError during print: {e}")
            # Can occur if custom stdout has recursive __str__ or __repr__
            logger.info(f"Recursion error prevented printing: {greeting_message}")
        except Exception as e:
            logger.error(f"Unexpected error during print: {type(e).__name__} - {e}")
            logger.info(f"Attempted to print: {greeting_message}")
    except Exception as e:
        logger.critical(f"Critical error in greet function: {type(e).__name__} - {e}", exc_info=True)
        try:
            print("An unexpected error occurred. Please check the logs.")
        except Exception:
            logger.critical("Could not print error message to user")

if __name__ == "__main__":
    try:
        greet("Hello")
    except Exception as e:
        logging.critical(f"Fatal error in main: {e}", exc_info=True)
        sys.exit(1)

And, get ready for it, here is an extreme test suite, also courtesy of Claude.ai, weighing in at over 700 lines of code:

import pytest
import sys
from unittest.mock import Mock, patch, MagicMock, call
from io import StringIO

# Import the greet function (assuming it's in greet.py)
# from greet import greet
from greet_full_error_handling_logging import greet

class CustomCriticalError(Exception):
    """Custom exception to test critical error paths."""
    pass

class TestGreetFunction:
    """Test suite for the greet function with comprehensive exception handling."""

    @pytest.fixture
    def mock_logger(self, mocker):
        """Fixture to mock the logger."""
        return mocker.patch('logging.getLogger')

    # ===== SUCCESS CASES =====

    def test_greet_normal_success(self, mocker, mock_logger):
        """Test normal successful greeting."""
        mock_input = mocker.patch('builtins.input', return_value='Alice')
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet("Hello")
        mock_input.assert_called_once_with("Please enter your name: ")
        mock_print.assert_called_once_with("Hello Alice!")
        logger_instance.info.assert_any_call("Prompting user for name input")
        logger_instance.info.assert_any_call("User input received: Alice")
        logger_instance.info.assert_any_call("Successfully displayed greeting: Hello Alice!")

    def test_greet_empty_name_input(self, mocker, mock_logger):
        """Test handling of empty name input."""
        mock_input = mocker.patch('builtins.input', return_value=' ')
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet("Hi")
        mock_input.assert_called_once_with("Please enter your name: ")
        mock_print.assert_called_once_with("Hi Guest!")
        logger_instance.warning.assert_called_with("Empty name entered")

    def test_greet_with_non_string_hello(self, mocker, mock_logger):
        """Test greeting parameter type conversion."""
        mock_input = mocker.patch('builtins.input', return_value='Bob')
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet(123)
        mock_input.assert_called_once_with("Please enter your name: ")
        mock_print.assert_called_once_with("123 Bob!")
        logger_instance.error.assert_called_with("Invalid greeting type: int. Expected string.")
        logger_instance.info.assert_any_call("Converted greeting to string: 123")

    def test_greet_with_empty_hello(self, mocker, mock_logger):
        """Test empty greeting parameter."""
        mock_input = mocker.patch('builtins.input', return_value='Charlie')
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet(" ")
        mock_input.assert_called_once_with("Please enter your name: ")
        mock_print.assert_called_once_with("Hello Charlie!")
        logger_instance.warning.assert_called_with("Empty greeting provided, using default.")

    # ===== INPUT EXCEPTION CASES =====

    def test_greet_input_eoferror(self, mocker, mock_logger):
        """Test EOFError during input - greeting still printed with Guest."""
        mock_input = mocker.patch('builtins.input', side_effect=EOFError())
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is called twice: error message + greeting
        logger_instance.error.assert_any_call("EOFError: Input stream closed unexpectedly")
        assert mock_print.call_count == 2
        mock_print.assert_any_call("\nNo input detected. Using default name.")
        mock_print.assert_any_call("Hello Guest!")

    def test_greet_input_keyboard_interrupt(self, mocker, mock_logger):
        """Test KeyboardInterrupt during input - no greeting printed, exits."""
        mock_input = mocker.patch('builtins.input', side_effect=KeyboardInterrupt())
        mock_print = mocker.patch('builtins.print')

        # Mock sys.exit to raise SystemExit so we can catch it
        def exit_side_effect(code):
            raise SystemExit(code)

        mock_exit = mocker.patch('sys.exit', side_effect=exit_side_effect)
        logger_instance = mock_logger.return_value
        # KeyboardInterrupt leads to sys.exit(0), which raises SystemExit.
        # SystemExit derives from BaseException, not Exception, so greet()'s outer
        # `except Exception` handler does not catch it and it reaches pytest.raises.
        with pytest.raises(SystemExit) as exc_info:
            greet("Hello")
        # Verify it was attempting to exit with 0
        assert exc_info.value.code == 0
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Cancellation message is printed
        logger_instance.warning.assert_called_with("KeyboardInterrupt: User cancelled input")
        mock_print.assert_any_call("\n\nInput cancelled by user.")
        mock_exit.assert_called_once_with(0)

    def test_greet_input_unicode_decode_error(self, mocker, mock_logger):
        """Test UnicodeDecodeError during input - greeting still printed with Guest."""
        mock_input = mocker.patch('builtins.input',
                                  side_effect=UnicodeDecodeError('utf-8', b'\x80', 0, 1, 'invalid'))
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is called twice: error message + greeting
        assert any('UnicodeDecodeError' in str(call) for call in logger_instance.error.call_args_list)
        assert mock_print.call_count == 2
        mock_print.assert_any_call("\nInvalid character encoding in input. Using default name.")
        mock_print.assert_any_call("Hello Guest!")

    def test_greet_input_runtime_error(self, mocker, mock_logger):
        """Test RuntimeError during input - greeting still printed with Guest."""
        mock_input = mocker.patch('builtins.input', side_effect=RuntimeError("Input error"))
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is called twice: error message + greeting
        logger_instance.error.assert_any_call("RuntimeError during input: Input error")
        assert mock_print.call_count == 2
        mock_print.assert_any_call("\nRuntime error reading input. Using default name.")
        mock_print.assert_any_call("Hello Guest!")

    def test_greet_input_os_error(self, mocker, mock_logger):
        """Test OSError during input - greeting still printed with Guest."""
        mock_input = mocker.patch('builtins.input', side_effect=OSError("File descriptor error"))
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is called twice: error message + greeting
        logger_instance.error.assert_any_call("OSError during input: File descriptor error")
        assert mock_print.call_count == 2
        mock_print.assert_any_call("\nSystem error reading input. Using default name.")
        mock_print.assert_any_call("Hello Guest!")

    def test_greet_input_value_error(self, mocker, mock_logger):
        """Test ValueError during input - greeting still printed with Guest."""
        mock_input = mocker.patch('builtins.input', side_effect=ValueError("Invalid value"))
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is called twice: error message + greeting
        logger_instance.error.assert_any_call("ValueError during input: Invalid value")
        assert mock_print.call_count == 2
        mock_print.assert_any_call("\nInvalid input format. Using default name.")
        mock_print.assert_any_call("Hello Guest!")

    def test_greet_input_unexpected_exception(self, mocker, mock_logger):
        """Test unexpected exception during input - greeting still printed with Guest."""
        mock_input = mocker.patch('builtins.input', side_effect=Exception("Unexpected error"))
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is called twice: error message + greeting
        assert any('Unexpected error during input' in str(call)
                   for call in logger_instance.error.call_args_list)
        assert mock_print.call_count == 2
        mock_print.assert_any_call("Hello Guest!")

    # ===== PRINT EXCEPTION CASES =====

    def test_greet_print_unicode_encode_error(self, mocker, mock_logger):
        """Test UnicodeEncodeError during print."""
        mock_input = mocker.patch('builtins.input', return_value='José')
        mock_print = mocker.patch('builtins.print')
        mock_print.side_effect = [
            UnicodeEncodeError('ascii', 'José', 0, 1, 'ordinal not in range'),
            None  # Second call succeeds (fallback)
        ]
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted twice (original + fallback)
        logger_instance.error.assert_any_call(
            mocker.ANY  # Match any UnicodeEncodeError message
        )
        logger_instance.info.assert_any_call("Displayed greeting with ASCII fallback")
        assert mock_print.call_count == 2

    def test_greet_print_unicode_decode_error(self, mocker, mock_logger):
        """Test UnicodeDecodeError during print."""
        mock_input = mocker.patch('builtins.input', return_value='Dave')
        mock_print = mocker.patch('builtins.print')
        mock_print.side_effect = [
            UnicodeDecodeError('utf-8', b'\x80', 0, 1, 'invalid'),
            None  # Second call succeeds (fallback)
        ]
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted twice (original + fallback)
        assert any('UnicodeDecodeError during print' in str(call)
                   for call in logger_instance.error.call_args_list)
        logger_instance.info.assert_any_call("Displayed greeting with UTF-8 fallback")
        assert mock_print.call_count == 2

    def test_greet_print_os_error(self, mocker, mock_logger):
        """Test OSError during print - greeting not displayed but logged."""
        mock_input = mocker.patch('builtins.input', return_value='Eve')
        mock_print = mocker.patch('builtins.print', side_effect=OSError("Output error"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted once but fails
        mock_print.assert_called_once_with("Hello Eve!")
        logger_instance.error.assert_any_call("OSError during print: Output error")
        logger_instance.info.assert_any_call("Could not print greeting: Hello Eve!")

    def test_greet_print_broken_pipe_error(self, mocker, mock_logger):
        """Test BrokenPipeError during print - caught as OSError since it's a subclass."""
        mock_input = mocker.patch('builtins.input', return_value='Frank')
        mock_print = mocker.patch('builtins.print', side_effect=BrokenPipeError("Pipe broken"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted once but fails
        mock_print.assert_called_once_with("Hello Frank!")
        # BrokenPipeError is caught by OSError handler (it's a subclass)
        # So we check for OSError in the log, not BrokenPipeError
        error_calls = [str(call) for call in logger_instance.error.call_args_list]
        assert any("OSError during print" in call or "BrokenPipeError during print" in call
                   for call in error_calls)

    def test_greet_print_io_error(self, mocker, mock_logger):
        """Test IOError during print - caught as OSError since IOError is an alias."""
        mock_input = mocker.patch('builtins.input', return_value='Grace')
        mock_print = mocker.patch('builtins.print', side_effect=IOError("I/O error"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted once but fails
        mock_print.assert_called_once_with("Hello Grace!")
        # IOError is essentially OSError in Python 3
        error_calls = [str(call) for call in logger_instance.error.call_args_list]
        assert any("OSError during print" in call or "IOError during print" in call
                   for call in error_calls)

    def test_greet_print_value_error(self, mocker, mock_logger):
        """Test ValueError during print - greeting not displayed but logged."""
        mock_input = mocker.patch('builtins.input', return_value='Henry')
        mock_print = mocker.patch('builtins.print', side_effect=ValueError("Invalid output"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted once but fails
        mock_print.assert_called_once_with("Hello Henry!")
        logger_instance.error.assert_any_call("ValueError during print: Invalid output")
        logger_instance.info.assert_any_call("Invalid output stream state: Hello Henry!")

    def test_greet_print_attribute_error(self, mocker, mock_logger):
        """Test AttributeError during print - greeting not displayed but logged."""
        mock_input = mocker.patch('builtins.input', return_value='Iris')
        mock_print = mocker.patch('builtins.print', side_effect=AttributeError("No write method"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted once but fails
        mock_print.assert_called_once_with("Hello Iris!")
        logger_instance.error.assert_any_call("AttributeError during print: No write method")
        logger_instance.info.assert_any_call("Output stream unavailable: Hello Iris!")

    def test_greet_print_memory_error(self, mocker, mock_logger):
        """Test MemoryError during print with successful truncation."""
        mock_input = mocker.patch('builtins.input', return_value='Jack')
        mock_print = mocker.patch('builtins.print')
        mock_print.side_effect = [
            MemoryError("Out of memory"),
            None  # Second call succeeds (truncated)
        ]
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted twice (original + truncated fallback)
        logger_instance.error.assert_any_call("MemoryError during print: Out of memory")
        logger_instance.warning.assert_any_call(
            "Printed truncated greeting due to memory constraints"
        )
        assert mock_print.call_count == 2

    def test_greet_print_memory_error_complete_failure(self, mocker, mock_logger):
        """Test MemoryError during print with complete failure."""
        mock_input = mocker.patch('builtins.input', return_value='Kate')
        mock_print = mocker.patch('builtins.print', side_effect=MemoryError("Out of memory"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted twice (original + truncated), both fail
        assert mock_print.call_count == 2
        logger_instance.error.assert_any_call("MemoryError during print: Out of memory")
        logger_instance.critical.assert_any_call("Could not print even truncated message")

    def test_greet_print_recursion_error(self, mocker, mock_logger):
        """Test RecursionError during print - greeting not displayed but logged."""
        mock_input = mocker.patch('builtins.input', return_value='Liam')
        mock_print = mocker.patch('builtins.print', side_effect=RecursionError("Max recursion"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted once but fails
        mock_print.assert_called_once_with("Hello Liam!")
        logger_instance.error.assert_any_call("RecursionError during print: Max recursion")
        logger_instance.info.assert_any_call("Recursion error prevented printing: Hello Liam!")

    def test_greet_print_unexpected_exception(self, mocker, mock_logger):
        """Test unexpected exception during print - greeting not displayed but logged."""
        mock_input = mocker.patch('builtins.input', return_value='Mia')
        mock_print = mocker.patch('builtins.print', side_effect=Exception("Unknown error"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is still attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted once but fails
        mock_print.assert_called_once_with("Hello Mia!")
        assert any('Unexpected error during print' in str(call)
                   for call in logger_instance.error.call_args_list)
        logger_instance.info.assert_any_call("Attempted to print: Hello Mia!")

    # ===== CRITICAL ERROR CASES =====

    def test_greet_critical_outer_exception(self, mocker, mock_logger):
        """Test critical exception in outer try block."""
        mock_input = mocker.patch('builtins.input', return_value='Test')
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value
        # Make the first logger.info call raise a critical error
        # This happens early enough to be caught by the outer handler
        logger_instance.info.side_effect = [
            CustomCriticalError("Critical failure in logging"),
            None,
            None
        ]
        greet("Hello")
        # Verify critical error was logged
        critical_calls = [str(call) for call in logger_instance.critical.call_args_list]
        assert len(critical_calls) > 0, "Expected critical error to be logged"
        assert any('Critical error in greet function' in str(call)
                   for call in critical_calls)
        # Verify error message was printed to user
        print_calls = [str(call) for call in mock_print.call_args_list]
        assert any("An unexpected error occurred" in str(call)
                   for call in print_calls)

    def test_greet_critical_error_print_fails(self, mocker, mock_logger):
        """Test critical error where even error message print fails."""
        mock_input = mocker.patch('builtins.input', return_value='Test')
        mock_print = mocker.patch('builtins.print', side_effect=OSError("Print failed"))
        logger_instance = mock_logger.return_value
        # Make logger raise a critical error
        logger_instance.info.side_effect = CustomCriticalError("Critical failure")
        greet("Hello")
        # Verify both critical errors were logged
        critical_calls = [str(call) for call in logger_instance.critical.call_args_list]
        assert len(critical_calls) >= 1, "Expected critical errors to be logged"
        # Should log about the critical error and optionally about print failure
        assert any('Critical error in greet function' in str(call)
                   for call in critical_calls)
        # Verify print was attempted
        assert mock_print.call_count >= 1

    def test_greet_exception_during_greeting_construction(self, mocker, mock_logger):
        """Test exception during f-string formatting of greeting."""
        mock_input = mocker.patch('builtins.input')
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value

        # Make input return an object that has strip() but fails during __str__
        class BadStringObject:
            def strip(self):
                return self  # Pass the strip check

            def __str__(self):
                raise CustomCriticalError("String conversion failed")

            def __bool__(self):
                return True  # Ensure it's not treated as empty

        mock_input.return_value = BadStringObject()
        greet("Hello")
        # The exception during f-string formatting should be caught
        # Check if it was logged as error or critical
        error_calls = [str(call) for call in logger_instance.error.call_args_list]
        critical_calls = [str(call) for call in logger_instance.critical.call_args_list]
        # The error could be caught by either handler
        has_error = any("String conversion failed" in call or "Critical error" in call
                        for call in error_calls + critical_calls)
        assert has_error or len(critical_calls) > 0, \
            f"Expected error to be logged. Errors: {error_calls}, Critical: {critical_calls}"

    # ===== EDGE CASES =====

    def test_greet_input_exception_then_print_exception(self, mocker):
        """Test when both input and print fail."""
        mock_logger = mocker.patch('logging.getLogger')
        mock_input = mocker.patch('builtins.input', side_effect=EOFError())
        mock_print = mocker.patch('builtins.print', side_effect=OSError("Output error"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted twice and both calls fail: the EOFError handler's message,
        # then the outer handler's "An unexpected error occurred" message.
        assert mock_print.call_count == 2
        # The EOFError from input should be logged
        error_calls = [str(call) for call in logger_instance.error.call_args_list]
        assert any("EOFError" in call for call in error_calls), \
            f"Expected EOFError in logs. Got: {error_calls}"
        # When print fails in the exception handler, it may not get logged separately
        # because it happens within the exception handling code itself.
        # So we just verify that print was attempted twice (which we already did above)
        # The second print failure might be caught by the outer exception handler
        # or might not be logged at all if it happens in exception handling code
        # Verify that the function completed without crashing
        assert mock_input.call_count == 1
        assert mock_print.call_count == 2

    def test_greet_input_exception_then_print_exception2(self, mocker):
        """Test when both input and print fail."""
        mock_logger = mocker.patch('logging.getLogger')
        mock_input = mocker.patch('builtins.input', side_effect=EOFError())
        mock_print = mocker.patch('builtins.print', side_effect=OSError("Output error"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted twice (EOFError handler's message + outer handler's
        # error message), and both fail
        assert mock_print.call_count == 2, \
            f"Expected print to be called twice. Called {mock_print.call_count} times"
        # The EOFError from input should be logged
        error_calls = [str(call) for call in logger_instance.error.call_args_list]
        assert any("EOFError" in call for call in error_calls), \
            f"Expected EOFError in logs. Got: {error_calls}"
        # When both input and print fail, the function should handle it gracefully
        # The print failure during the exception handler might get logged to info
        # since the greeting message that couldn't be printed is logged there
        info_calls = [str(call) for call in logger_instance.info.call_args_list]
        all_logs = error_calls + info_calls
        # Verify the greeting was attempted to be logged even if print failed
        assert any("Guest" in call for call in all_logs) or mock_print.call_count == 2, \
            f"Expected attempt to greet Guest. Errors: {error_calls}, Info: {info_calls}"

    def test_greet_input_exception_then_print_exception3(self, mocker):
        """Test when both input and print fail."""
        mock_logger = mocker.patch('logging.getLogger')
        mock_input = mocker.patch('builtins.input', side_effect=EOFError())
        mock_print = mocker.patch('builtins.print', side_effect=OSError("Output error"))
        logger_instance = mock_logger.return_value
        greet("Hello")
        # Input is attempted
        mock_input.assert_called_once_with("Please enter your name: ")
        # Print is attempted twice (EOFError handler's message + outer handler's
        # error message), and both fail
        assert mock_print.call_count == 2, \
            f"Expected print to be called twice. Called {mock_print.call_count} times"
        # The EOFError from input should be logged
        error_calls = [str(call) for call in logger_instance.error.call_args_list]
        assert any("EOFError" in call for call in error_calls), \
            f"Expected EOFError in logs. Got: {error_calls}"
        # The OSError raised inside the EOFError handler is caught by greet()'s outer
        # handler and logged via logger.critical, so it does not appear in these lists.
        # (has_print_error is computed but never asserted.)
        info_calls = [str(call) for call in logger_instance.info.call_args_list]
        has_print_error = any("OSError" in call for call in error_calls + info_calls)
        # At minimum, we know the function handled both failures without crashing
        assert mock_input.call_count == 1
        assert mock_print.call_count == 2

# ===== INTEGRATION TESTS =====

class TestGreetIntegration:

"""Integration tests without extensive mocking."""

def test_greet_real_execution(self, mocker):

"""Test greet with minimal mocking to verify real execution path."""

mock_logger = mocker.patch('logging.getLogger')

mock_input = mocker.patch('builtins.input', return_value='TestUser')

# Capture actual print output

captured_output = StringIO()

mocker.patch('sys.stdout', captured_output)

greet("Greetings")

mock_input.assert_called_once_with("Please enter your name: ")

output = captured_output.getvalue()

assert "Greetings TestUser!" in output

# ===== PARAMETRIZED TESTS =====

class TestGreetParametrized:
    """Parametrized tests for multiple scenarios."""

    @pytest.mark.parametrize("greeting,name,expected", [
        ("Hello", "Alice", "Hello Alice!"),
        ("Hi", "Bob", "Hi Bob!"),
        ("Hey", "Charlie", "Hey Charlie!"),
        ("Greetings", "Dave", "Greetings Dave!"),
    ])
    def test_greet_various_inputs(self, mocker, greeting, name, expected):
        """Test various greeting and name combinations."""
        mock_logger = mocker.patch('logging.getLogger')
        mock_input = mocker.patch('builtins.input', return_value=name)
        mock_print = mocker.patch('builtins.print')

        greet(greeting)

        mock_input.assert_called_once_with("Please enter your name: ")
        mock_print.assert_any_call(expected)

    @pytest.mark.parametrize("exception_class,exception_arg,error_message,continues_to_greet", [
        (EOFError, "", "EOFError: Input stream closed unexpectedly", True),
        (RuntimeError, "Test error", "RuntimeError during input:", True),
        (OSError, "Test error", "OSError during input:", True),
        (ValueError, "Test error", "ValueError during input:", True),
    ])
    def test_greet_input_exceptions_parametrized(self, mocker, exception_class,
                                                 exception_arg, error_message,
                                                 continues_to_greet):
        """Test various input exceptions and verify if greeting continues."""
        mock_logger = mocker.patch('logging.getLogger')

        # Handle exceptions with or without arguments
        if exception_arg:
            mock_input = mocker.patch('builtins.input', side_effect=exception_class(exception_arg))
        else:
            mock_input = mocker.patch('builtins.input', side_effect=exception_class())

        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value

        greet("Hello")

        # Input is always attempted
        mock_input.assert_called_once_with("Please enter your name: ")

        # Check that appropriate error was logged
        error_calls = [str(call) for call in logger_instance.error.call_args_list]
        assert any(error_message in call for call in error_calls)

        # Verify greeting was printed with "Guest"
        greeting_calls = [str(c) for c in mock_print.call_args_list
                          if 'Hello Guest!' in str(c)]
        assert len(greeting_calls) > 0, f"{exception_class.__name__} should continue to greet"

    @pytest.mark.parametrize("exception_class,exception_arg,error_log_key,attempts_fallback", [
        (OSError, "Output error", "OSError during print:", False),
        # BrokenPipeError is a subclass of OSError and IOError is an alias of it,
        # so both are caught as OSError
        (ValueError, "Invalid output", "ValueError during print:", False),
        (AttributeError, "No write", "AttributeError during print:", False),
        (RecursionError, "Max recursion", "RecursionError during print:", False),
        (UnicodeEncodeError, ('ascii', 'test', 0, 1, 'ordinal'), "UnicodeEncodeError", True),
    ])
    def test_greet_print_exceptions_parametrized(self, mocker, exception_class,
                                                 exception_arg, error_log_key,
                                                 attempts_fallback):
        """Test various print exceptions."""
        mock_logger = mocker.patch('logging.getLogger')
        mock_input = mocker.patch('builtins.input', return_value='TestUser')
        mock_print = mocker.patch('builtins.print')

        # Handle exceptions with different argument types
        if isinstance(exception_arg, tuple):
            mock_print.side_effect = [exception_class(*exception_arg), None]
        else:
            mock_print.side_effect = exception_class(exception_arg)

        logger_instance = mock_logger.return_value

        greet("Hello")

        # Input is always attempted
        mock_input.assert_called_once_with("Please enter your name: ")

        # Check that appropriate error was logged
        error_calls = [str(call) for call in logger_instance.error.call_args_list]
        assert any(error_log_key in call for call in error_calls), \
            f"Expected '{error_log_key}' in error logs. Got: {error_calls}"

        # Verify print behavior
        if attempts_fallback:
            # Should attempt print twice (original + fallback)
            assert mock_print.call_count == 2
        else:
            # Should attempt print once and fail
            assert mock_print.call_count == 1

# ===== EDGE CASE TESTS =====

class TestGreetEdgeCases:
    """Test edge cases and boundary conditions."""

    def test_greet_with_none_hello(self, mocker):
        """Test with None as greeting parameter."""
        mock_logger = mocker.patch('logging.getLogger')
        mock_input = mocker.patch('builtins.input', return_value='User')
        mock_print = mocker.patch('builtins.print')
        logger_instance = mock_logger.return_value

        greet(None)

        mock_input.assert_called_once_with("Please enter your name: ")
        # None converted to string "None"
        mock_print.assert_called_once_with("None User!")
        logger_instance.error.assert_any_call("Invalid greeting type: NoneType. Expected string.")

    def test_greet_unicode_in_name_and_greeting(self, mocker):
        """Test with Unicode characters in both greeting and name."""
        mock_logger = mocker.patch('logging.getLogger')
        mock_input = mocker.patch('builtins.input', return_value='José')
        mock_print = mocker.patch('builtins.print')

        greet("¡Hola")

        mock_input.assert_called_once_with("Please enter your name: ")
        mock_print.assert_called_once_with("¡Hola José!")

% pytest test_greet_full.py -v

============================= test session starts ==============================

platform darwin -- Python 3.12.11, pytest-8.4.1, pluggy-1.6.0 -- python3

cachedir: .pytest_cache

rootdir: ./

configfile: pyproject.toml

plugins: langsmith-0.4.29, cov-6.3.0, anyio-4.10.0, dash-3.2.0, mock-3.14.1

collected 44 items

test_greet_full.py::TestGreetFunction::test_greet_normal_success PASSED [ 2%]

test_greet_full.py::TestGreetFunction::test_greet_empty_name_input PASSED [ 4%]

test_greet_full.py::TestGreetFunction::test_greet_with_non_string_hello PASSED [ 6%]

test_greet_full.py::TestGreetFunction::test_greet_with_empty_hello PASSED [ 9%]

test_greet_full.py::TestGreetFunction::test_greet_input_eoferror PASSED [ 11%]

test_greet_full.py::TestGreetFunction::test_greet_input_keyboard_interrupt PASSED [ 13%]

test_greet_full.py::TestGreetFunction::test_greet_input_unicode_decode_error PASSED [ 15%]

test_greet_full.py::TestGreetFunction::test_greet_input_runtime_error PASSED [ 18%]

test_greet_full.py::TestGreetFunction::test_greet_input_os_error PASSED [ 20%]

test_greet_full.py::TestGreetFunction::test_greet_input_value_error PASSED [ 22%]

test_greet_full.py::TestGreetFunction::test_greet_input_unexpected_exception PASSED [ 25%]

test_greet_full.py::TestGreetFunction::test_greet_print_unicode_encode_error PASSED [ 27%]

test_greet_full.py::TestGreetFunction::test_greet_print_unicode_decode_error PASSED [ 29%]

test_greet_full.py::TestGreetFunction::test_greet_print_os_error PASSED [ 31%]

test_greet_full.py::TestGreetFunction::test_greet_print_broken_pipe_error PASSED [ 34%]

test_greet_full.py::TestGreetFunction::test_greet_print_io_error PASSED [ 36%]

test_greet_full.py::TestGreetFunction::test_greet_print_value_error PASSED [ 38%]

test_greet_full.py::TestGreetFunction::test_greet_print_attribute_error PASSED [ 40%]

test_greet_full.py::TestGreetFunction::test_greet_print_memory_error PASSED [ 43%]

test_greet_full.py::TestGreetFunction::test_greet_print_memory_error_complete_failure PASSED [ 45%]

test_greet_full.py::TestGreetFunction::test_greet_print_recursion_error PASSED [ 47%]

test_greet_full.py::TestGreetFunction::test_greet_print_unexpected_exception PASSED [ 50%]

test_greet_full.py::TestGreetFunction::test_greet_critical_outer_exception PASSED [ 52%]

test_greet_full.py::TestGreetFunction::test_greet_critical_error_print_fails PASSED [ 54%]

test_greet_full.py::TestGreetFunction::test_greet_exception_during_greeting_construction PASSED [ 56%]

test_greet_full.py::TestGreetFunction::test_greet_input_exception_then_print_exception PASSED [ 59%]

test_greet_full.py::TestGreetFunction::test_greet_input_exception_then_print_exception2 PASSED [ 61%]

test_greet_full.py::TestGreetFunction::test_greet_input_exception_then_print_exception3 PASSED [ 63%]

test_greet_full.py::TestGreetIntegration::test_greet_real_execution PASSED [ 65%]

test_greet_full.py::TestGreetParametrized::test_greet_various_inputs[Hello-Alice-Hello Alice!] PASSED [ 68%]

test_greet_full.py::TestGreetParametrized::test_greet_various_inputs[Hi-Bob-Hi Bob!] PASSED [ 70%]

test_greet_full.py::TestGreetParametrized::test_greet_various_inputs[Hey-Charlie-Hey Charlie!] PASSED [ 72%]

test_greet_full.py::TestGreetParametrized::test_greet_various_inputs[Greetings-Dave-Greetings Dave!] PASSED [ 75%]

test_greet_full.py::TestGreetParametrized::test_greet_input_exceptions_parametrized[EOFError--EOFError: Input stream closed unexpectedly-True] PASSED [ 77%]

test_greet_full.py::TestGreetParametrized::test_greet_input_exceptions_parametrized[RuntimeError-Test error-RuntimeError during input:-True] PASSED [ 79%]

test_greet_full.py::TestGreetParametrized::test_greet_input_exceptions_parametrized[OSError-Test error-OSError during input:-True] PASSED [ 81%]

test_greet_full.py::TestGreetParametrized::test_greet_input_exceptions_parametrized[ValueError-Test error-ValueError during input:-True] PASSED [ 84%]

test_greet_full.py::TestGreetParametrized::test_greet_print_exceptions_parametrized[OSError-Output error-OSError during print:-False] PASSED [ 86%]

test_greet_full.py::TestGreetParametrized::test_greet_print_exceptions_parametrized[ValueError-Invalid output-ValueError during print:-False] PASSED [ 88%]

test_greet_full.py::TestGreetParametrized::test_greet_print_exceptions_parametrized[AttributeError-No write-AttributeError during print:-False] PASSED [ 90%]

test_greet_full.py::TestGreetParametrized::test_greet_print_exceptions_parametrized[RecursionError-Max recursion-RecursionError during print:-False] PASSED [ 93%]

test_greet_full.py::TestGreetParametrized::test_greet_print_exceptions_parametrized[UnicodeEncodeError-exception_arg4-UnicodeEncodeError-True] PASSED [ 95%]

test_greet_full.py::TestGreetEdgeCases::test_greet_with_none_hello PASSED [ 97%]

test_greet_full.py::TestGreetEdgeCases::test_greet_unicode_in_name_and_greeting PASSED [100%]

============================== 44 passed in 0.10s ==============================

%

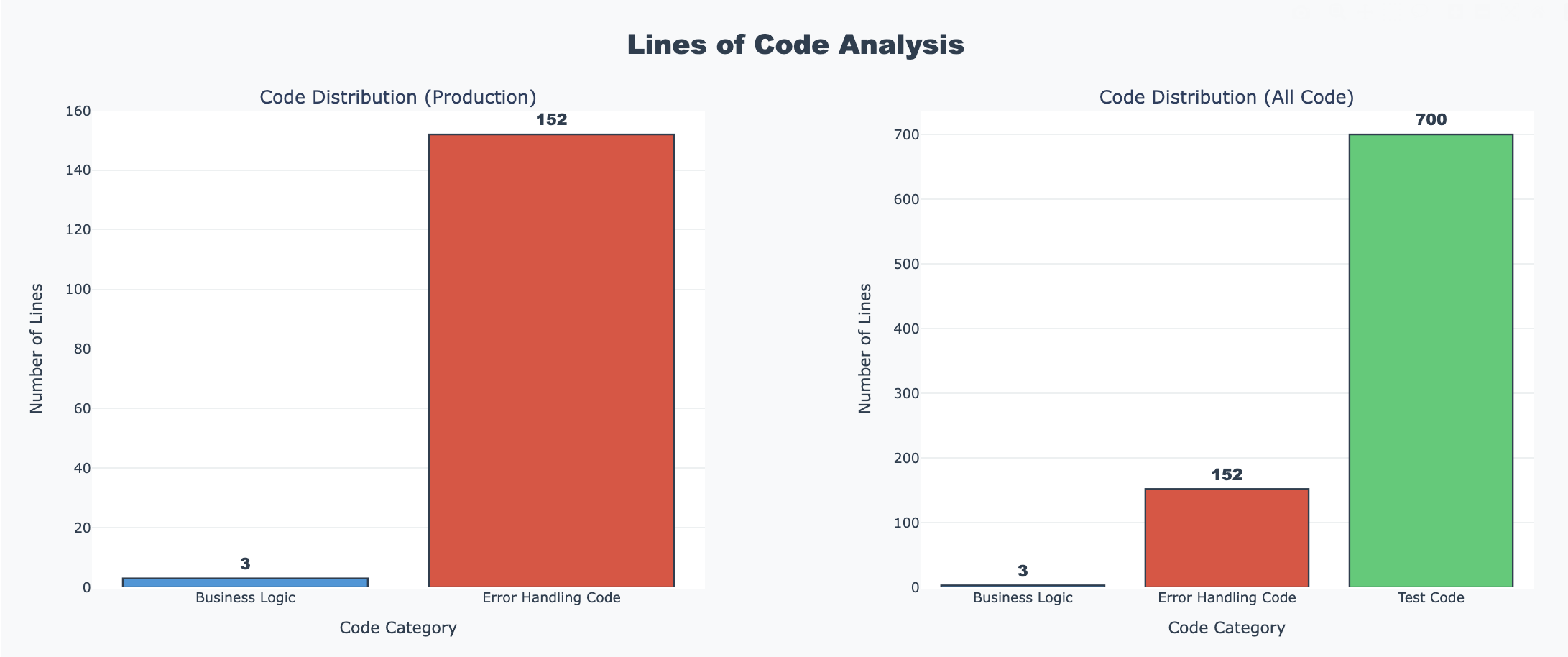

Wow, look at the ratio of business-logic lines to error-handling lines, and then of both to the lines of test code that go with them:

Yes, it's an extreme case. But a ratio of 3:152:700 (business logic to error handling to test code) is still remarkable.
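How the lines get counted matters a lot for a ratio like this. As a minimal sketch, assuming a deliberately crude rule (skip blank and comment-only lines, count everything else, docstrings included), a counter could look like the following. `count_code_lines` and `ratio` are hypothetical helpers written for this illustration, and their rule will not necessarily reproduce the exact numbers above.

```python
def count_code_lines(source: str) -> int:
    """Count lines that are neither blank nor comment-only.

    Crude by design (an assumption, not a standard metric):
    docstrings and multi-line strings still count as code.
    """
    count = 0
    for line in source.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith("#"):
            count += 1
    return count


def ratio(*counts: int) -> str:
    """Format line counts as a colon-separated ratio, e.g. '3:152:700'."""
    return ":".join(str(c) for c in counts)


# The one-line program and the first, "check it by eye" test case
hello_source = 'print("Hello Python world!")\n'
hello_test_source = '''
def test_hello_world():
    # Output is checked by eye when run with pytest -s
    print("Hello Python world!")
'''

print(ratio(count_code_lines(hello_source), count_code_lines(hello_test_source)))  # 1:2
```

Even the very first test case already outweighs the program it tests, and it does not actually assert anything yet.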

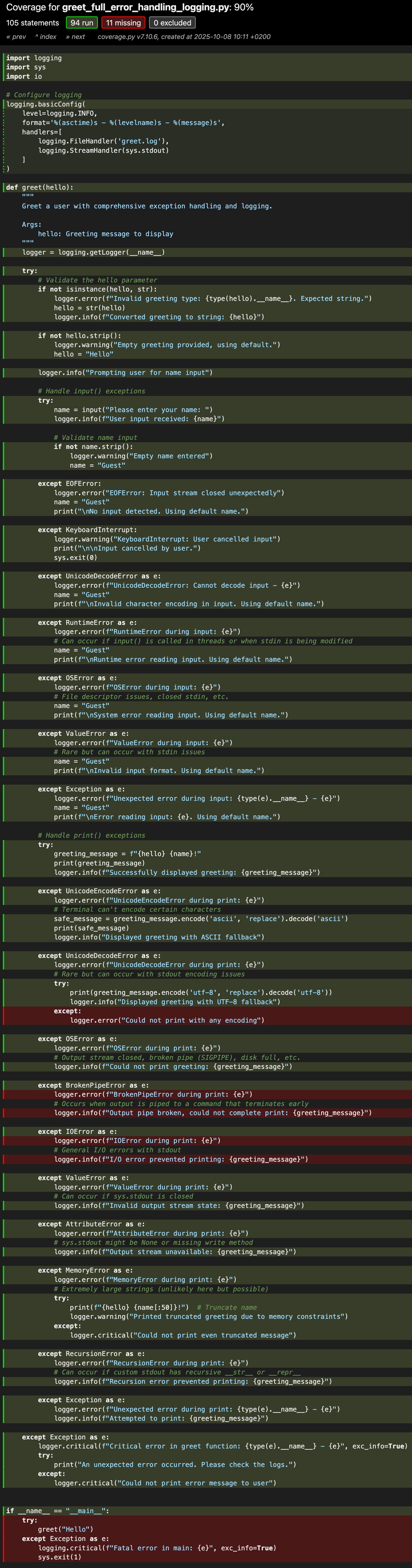

Just for fun, let's also check coverage (scrollable image).
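Coverage tools such as coverage.py (which the pytest-cov plugin, listed as `cov-6.3.0` in the run above, wraps) record which source lines executed while the tests ran. As a toy illustration of the idea only, and not of how those tools are actually implemented, the standard library's `sys.settrace` is enough to record the executed lines of a single function. `executed_lines` and `greet_name` are hypothetical names made up for this sketch.

```python
import sys


def executed_lines(func, *args, **kwargs):
    """Call func(*args, **kwargs) and return the set of its line numbers that ran.

    Toy line coverage built on sys.settrace: only lines inside func's own
    code object are recorded (not lines of functions it calls).
    """
    target = func.__code__
    hits = set()

    def local_tracer(frame, event, arg):
        # A 'line' event fires just before a new source line executes
        if event == "line":
            hits.add(frame.f_lineno)
        return local_tracer

    def global_tracer(frame, event, arg):
        # Called for every new frame; trace only frames running func's code
        return local_tracer if frame.f_code is target else None

    previous = sys.gettrace()
    sys.settrace(global_tracer)
    try:
        func(*args, **kwargs)
    finally:
        sys.settrace(previous)  # always restore whatever tracer was active
    return hits


# Hypothetical helper; its body has no comments or docstring so the
# line offsets used below are easy to follow.
def greet_name(name):
    if not name.strip():
        name = "Guest"
    return f"Hello {name}!"


first = greet_name.__code__.co_firstlineno
guest_line = first + 2  # the `name = "Guest"` line

print(guest_line in executed_lines(greet_name, "Ann"))  # False
print(guest_line in executed_lines(greet_name, "   "))  # True
```

A suite that only ever passes a real name would never reach the `"Guest"` line, and that missed line is exactly the kind of gap a coverage report makes visible.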

We could also try a more middle-of-the-road approach:

import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


def greet(hello):
    """Simple greeting function with basic error handling."""
    try:
        name = input("Please enter your name: ")
        if not name.strip():
            name = "Guest"
        print(f"{hello} {name}!")
    except KeyboardInterrupt:
        print("\n\nGoodbye!")
        return
    except EOFError:
        print("\nNo input detected.")
        return
    except Exception as e:
        logger.error(f"Unexpected error: {e}")
        print("Sorry, something went wrong!")