Tests for the Cloudflare problem?

This post follows on from my most recent article, in which I made a mountain out of a molehill by testing a "Hello world!" program. Perhaps some of that is more applicable than it initially appears. Perhaps.

Claude and I were discussing the Cloudflare problem :) It is not my intention to fish in troubled waters, but I think it makes for a good discussion, and it relates to my last blog post. So here goes.

We got to talking about the simplest Rust program that uses the unwrap() function to cause this kind of panic, and how test cases might have helped prevent it.

A simple Rust program that uses unwrap():

```rust
fn main() {
    let text = "abc";
    let number = text.parse::<i32>().unwrap(); // 💥 PANIC!
    println!("The number is: {}", number); // Never executes
}
```

And when you run it:

```text
% cargo run
Compiling ...
Running `target/debug/simple_hello_panic`

thread 'main' (231251465) panicked at src/main.rs:3:38:
called `Result::unwrap()` on an `Err` value: ParseIntError { kind: InvalidDigit }
simple_hello_panic %
```

Like we did with the Hello World greet program, we can parameterize it and add some test cases.

```rust
// UNSAFE version - uses unwrap, will panic on invalid input
fn parse_number_unsafe(text: &str) -> i32 {
    println!("Parsing '{}' (unsafe version)", text);
    let number = text.parse::<i32>().unwrap();
    println!("Successfully parsed: {}", number);
    number
}

// SAFE version - returns Result, handles errors gracefully
fn parse_number_safe(text: &str) -> Result<i32, String> {
    println!("Parsing '{}' (safe version)", text);
    match text.parse::<i32>() {
        Ok(number) => {
            println!("Successfully parsed: {}", number);
            Ok(number)
        }
        Err(e) => {
            println!("Parse failed: {}", e);
            Err(format!("Could not parse '{}': {}", text, e))
        }
    }
}

fn main() {
    let test_inputs = vec!["42", "abc", "-10", "999"];

    println!("=== Testing SAFE version ===");
    for input in &test_inputs {
        match parse_number_safe(input) {
            Ok(num) => println!("✅ Got: {}\n", num),
            Err(e) => println!("❌ Error: {}\n", e),
        }
    }

    println!("\n=== Testing UNSAFE version ===");
    println!("⚠️ This will crash on first invalid input!\n");

    // Deliberately left enabled so you can see the crash:
    for input in &test_inputs {
        let num = parse_number_unsafe(input);
        println!("✅ Got: {}\n", num);
    }
}

#[cfg(test)]
mod tests {
    use super::*;

    // ============================================
    // Tests for SAFE version - all pass
    // ============================================

    #[test]
    fn test_safe_valid_positive() {
        let result = parse_number_safe("42");
        assert!(result.is_ok());
        assert_eq!(result.unwrap(), 42);
    }

    #[test]
    fn test_safe_valid_negative() {
        let result = parse_number_safe("-10");
        assert!(result.is_ok());
        assert_eq!(result.unwrap(), -10);
    }

    #[test]
    fn test_safe_valid_zero() {
        let result = parse_number_safe("0");
        assert!(result.is_ok());
        assert_eq!(result.unwrap(), 0);
    }

    #[test]
    fn test_safe_invalid_letters() {
        let result = parse_number_safe("abc");
        assert!(result.is_err());
        assert!(result.unwrap_err().contains("Could not parse"));
    }

    #[test]
    fn test_safe_invalid_float() {
        let result = parse_number_safe("3.14");
        assert!(result.is_err());
    }

    #[test]
    fn test_safe_invalid_empty() {
        let result = parse_number_safe("");
        assert!(result.is_err());
    }

    #[test]
    fn test_safe_invalid_mixed() {
        let result = parse_number_safe("12abc");
        assert!(result.is_err());
    }

    // ============================================
    // Tests for UNSAFE version - some panic
    // ============================================

    #[test]
    fn test_unsafe_valid_positive() {
        let result = parse_number_unsafe("42");
        assert_eq!(result, 42);
    }

    #[test]
    fn test_unsafe_valid_negative() {
        let result = parse_number_unsafe("-10");
        assert_eq!(result, -10);
    }

    #[test]
    fn test_unsafe_valid_zero() {
        let result = parse_number_unsafe("0");
        assert_eq!(result, 0);
    }

    #[test]
    #[should_panic(expected = "called `Result::unwrap()` on an `Err` value")]
    fn test_unsafe_invalid_letters_panics() {
        parse_number_unsafe("abc"); // PANICS!
    }

    #[test]
    #[should_panic(expected = "called `Result::unwrap()` on an `Err` value")]
    fn test_unsafe_invalid_float_panics() {
        parse_number_unsafe("3.14"); // PANICS!
    }

    #[test]
    #[should_panic(expected = "called `Result::unwrap()` on an `Err` value")]
    fn test_unsafe_invalid_empty_panics() {
        parse_number_unsafe(""); // PANICS!
    }

    #[test]
    #[should_panic(expected = "called `Result::unwrap()` on an `Err` value")]
    fn test_unsafe_invalid_mixed_panics() {
        parse_number_unsafe("12abc"); // PANICS!
    }
}
```

We can run it via the `main` in this new file:

```text
% cargo run
Finished `dev` profile [unoptimized + debuginfo] target(s) in 0.03s
Running `target/debug/hello_parameterized`
=== Testing SAFE version ===
Parsing '42' (safe version)
Successfully parsed: 42
✅ Got: 42

Parsing 'abc' (safe version)
Parse failed: invalid digit found in string
❌ Error: Could not parse 'abc': invalid digit found in string

Parsing '-10' (safe version)
Successfully parsed: -10
✅ Got: -10

Parsing '999' (safe version)
Successfully parsed: 999
✅ Got: 999

=== Testing UNSAFE version ===
⚠️ This will crash on first invalid input!

Parsing '42' (unsafe version)
Successfully parsed: 42
✅ Got: 42

Parsing 'abc' (unsafe version)

thread 'main' (231327405) panicked at src/main.rs:4:38:
called `Result::unwrap()` on an `Err` value: ParseIntError { kind: InvalidDigit }
%
```

And we can run the tests, which show (and expect) the panicking scenarios:

```text
% cargo test
Compiling hello_parameterized v0.1.0 (hello_parameterized)
Finished `test` profile [unoptimized + debuginfo] target(s) in 0.58s
Running unittests src/main.rs (target/debug/deps/hello_parameterized-558d1d3e26fec6d5)

running 14 tests
test tests::test_safe_invalid_empty ... ok
test tests::test_safe_invalid_letters ... ok
test tests::test_safe_valid_zero ... ok
test tests::test_safe_invalid_float ... ok
test tests::test_safe_valid_positive ... ok
test tests::test_safe_valid_negative ... ok
test tests::test_safe_invalid_mixed ... ok
test tests::test_unsafe_valid_negative ... ok
test tests::test_unsafe_valid_positive ... ok
test tests::test_unsafe_invalid_empty_panics - should panic ... ok
test tests::test_unsafe_invalid_letters_panics - should panic ... ok
test tests::test_unsafe_invalid_float_panics - should panic ... ok
test tests::test_unsafe_invalid_mixed_panics - should panic ... ok
test tests::test_unsafe_valid_zero ... ok

test result: ok. 14 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

hello_parameterized %
```
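`#[should_panic]` needs one test function per bad input. If you'd rather keep a table of inputs in a single test, the standard library's `std::panic::catch_unwind` turns a panic into an `Err` you can assert on. A minimal sketch, assuming the default `panic = "unwind"` strategy (nothing is caught under `panic = "abort"`); `panics_on` is a hypothetical helper, and `parse_number_unsafe` is a stripped-down copy of the function above:

```rust
use std::panic;

// Stripped-down copy of parse_number_unsafe from above (no logging).
pub fn parse_number_unsafe(text: &str) -> i32 {
    text.parse::<i32>().unwrap()
}

/// Hypothetical helper: returns true if parse_number_unsafe(input) panics.
/// Relies on the default panic = "unwind" strategy.
pub fn panics_on(input: &str) -> bool {
    panic::catch_unwind(|| parse_number_unsafe(input)).is_err()
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn table_of_inputs() {
        // "99999999999" overflows i32: one more way this unwrap() can blow up.
        for bad in ["abc", "3.14", "", "12abc", "99999999999"] {
            assert!(panics_on(bad), "expected a panic for {:?}", bad);
        }
        for good in ["42", "-10", "0", "999"] {
            assert!(!panics_on(good), "did not expect a panic for {:?}", good);
        }
    }
}
```

Note that the default panic hook still reports each caught panic; `catch_unwind` only stops the unwinding from ending the test.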

Bottom line: don't use unwrap() in production code. But if you do, you can still test it (and by working through those tests, you'll probably stop using it).
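For reference, a minimal sketch of the usual alternatives to a bare `unwrap()`: `?` to hand the error back to the caller, `unwrap_or` for a sensible default, and `expect` when you really do believe the value can't be bad and want the panic message to say why. The function names are made up for illustration; the methods are standard library:

```rust
use std::num::ParseIntError;

/// Propagate the error to the caller with `?` instead of panicking.
pub fn parse_and_double(text: &str) -> Result<i32, ParseIntError> {
    let n = text.trim().parse::<i32>()?; // early-returns Err(ParseIntError) on bad input
    Ok(n * 2)
}

/// Fall back to a default when a missing or invalid value is acceptable.
pub fn parse_or_default(text: &str, default: i32) -> i32 {
    text.parse::<i32>().unwrap_or(default)
}

/// Still panics on bad input, but the message documents the assumption.
pub fn parse_trusted(text: &str) -> i32 {
    text.parse::<i32>()
        .expect("parse_trusted: input was assumed to be a valid i32")
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn question_mark_propagates_the_error() {
        assert_eq!(parse_and_double("21"), Ok(42));
        assert!(parse_and_double("abc").is_err());
    }

    #[test]
    fn unwrap_or_falls_back() {
        assert_eq!(parse_or_default("abc", 30), 30);
        assert_eq!(parse_or_default("7", 30), 7);
    }

    #[test]
    #[should_panic(expected = "assumed to be a valid i32")]
    fn expect_panics_with_our_message() {
        parse_trusted("abc");
    }
}
```

Only the first two avoid the crash; `expect` just makes the crash easier to diagnose.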

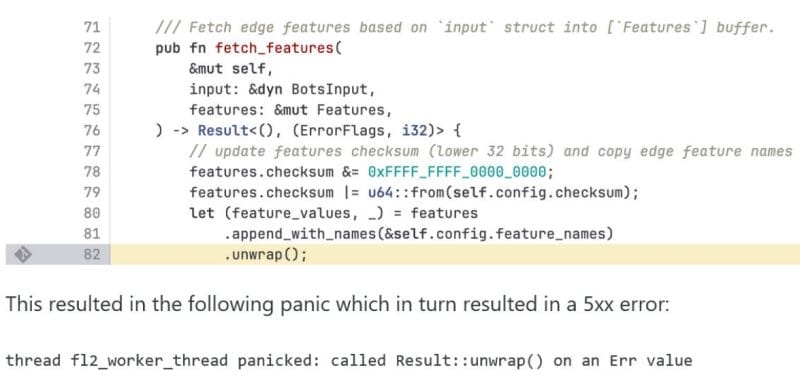

Cloudflare was nice enough to publish the offending code and provide an explanation:

There is some function chaining here: append_with_names(&self.config.feature_names).unwrap(). What's out of sight is the append_with_names() function itself, which handled the config file that got too big. That function returned Err, and the unwrap() that followed panicked.

Really, the return value of append_with_names() is an indirect input to the code above, just like the input function call was to our greet program in the Hello World article.
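One way to act on that "indirect input" idea without any mocking is to separate the call from the decision: write the decision logic so it takes the Result as an ordinary argument, and a test can then hand it an `Err` directly. A minimal sketch; `apply_feature_update` and its keep-the-previous-features policy are hypothetical, not what Cloudflare's code does:

```rust
/// Hypothetical decision logic, separated from the call that produces `update`.
/// `update` stands in for whatever append_with_names() returned.
pub fn apply_feature_update(
    update: Result<(Vec<String>, usize), String>,
    previous: &[String],
) -> Vec<String> {
    match update {
        // Accept the new feature values.
        Ok((values, _count)) => values,
        // Reject the update but keep running on the last known-good features.
        Err(e) => {
            eprintln!("rejecting feature update ({}); keeping previous features", e);
            previous.to_vec()
        }
    }
}

#[cfg(test)]
mod tests {
    use super::*;

    fn strings(items: &[&str]) -> Vec<String> {
        items.iter().map(|s| s.to_string()).collect()
    }

    #[test]
    fn ok_update_is_applied() {
        let new = strings(&["x", "y"]);
        let result = apply_feature_update(Ok((new.clone(), 2)), &strings(&["a"]));
        assert_eq!(result, new);
    }

    #[test]
    fn err_update_keeps_previous() {
        let previous = strings(&["a", "b"]);
        let result = apply_feature_update(Err("too many features".to_string()), &previous);
        assert_eq!(result, previous);
    }
}
```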

Guessing here, but append_with_names() could look something like this:

```rust
// Inside the library code (not shown):
// A guess, not Cloudflare's actual code; Values, Error and MAX_FEATURES are placeholders.
pub fn append_with_names(&mut self, names: &[String]) -> Result<(Values, usize), Error> {
    if names.len() > MAX_FEATURES { // ← Size check here
        return Err(Error::TooManyFeatures);
    }
    // ... append logic
}
```

Then again, we may not need to know what's inside the function at all, only that it may return Err (or, for an Option, None). In other words, ask the question: "What does this function feed into the code that calls it?" We can take a (perhaps overkill) approach and mock append_with_names(), just as we mocked input() and print() in the Hello World article. This gives us total control, so we can test each possible scenario without having to make it real. The easier it is to simulate a scenario than to reproduce it, the less viscosity there is in writing the test: easier than creating an oversized config file and adding it to your test fixtures, and easier than actually running out of memory or filling up a storage device.
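A mocking crate isn't strictly required for this. Here is a minimal sketch of a hand-written test double, assuming a `FeatureStore` trait shaped like the one in the demo; `StubStore` and `fetch_feature_count` are hypothetical names. The stub returns whatever result it was built with and counts its calls:

```rust
pub trait FeatureStore {
    fn append_with_names(&mut self, names: &[String]) -> Result<(Vec<String>, usize), String>;
}

/// Hypothetical hand-rolled stub: returns a canned result and records call count.
pub struct StubStore {
    canned: Result<(Vec<String>, usize), String>,
    pub calls: usize,
}

impl StubStore {
    /// A store whose append always fails with `msg` (e.g. "too many features").
    pub fn failing(msg: &str) -> Self {
        Self { canned: Err(msg.to_string()), calls: 0 }
    }

    /// A store whose append always succeeds with the given names.
    pub fn succeeding(names: &[&str]) -> Self {
        let values: Vec<String> = names.iter().map(|s| s.to_string()).collect();
        let count = values.len();
        Self { canned: Ok((values, count)), calls: 0 }
    }
}

impl FeatureStore for StubStore {
    fn append_with_names(&mut self, _names: &[String]) -> Result<(Vec<String>, usize), String> {
        self.calls += 1;
        self.canned.clone()
    }
}

/// Code under test: propagates the store's error with `?` instead of unwrap().
pub fn fetch_feature_count<T: FeatureStore>(store: &mut T, names: &[String]) -> Result<usize, String> {
    let (_values, count) = store.append_with_names(names)?;
    Ok(count)
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn error_from_store_is_returned_not_panicked() {
        let mut store = StubStore::failing("too many features");
        assert_eq!(fetch_feature_count(&mut store, &[]), Err("too many features".to_string()));
        assert_eq!(store.calls, 1);
    }

    #[test]
    fn success_reports_count() {
        let mut store = StubStore::succeeding(&["f1", "f2"]);
        assert_eq!(fetch_feature_count(&mut store, &[]), Ok(2));
    }
}
```

The trade-off versus a crate like mockall: no extra dependency, but argument checks and call-count expectations have to be written by hand.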

```rust
use std::collections::HashMap;

// ============================================
// PART 1: Result-based API (returns Err)
// ============================================

#[derive(Debug, Clone)]
pub struct Features {
    pub checksum: u64,
    data: Vec<String>,
}

#[derive(Debug, Clone)]
pub struct Config {
    pub feature_names: Vec<String>,
    pub checksum: u64,
}

// This trait returns Result (can return Err)
pub trait FeatureStore {
    fn append_with_names(&mut self, names: &[String]) -> Result<(Vec<String>, usize), String>;
}

// Real implementation
pub struct RealFeatureStore {
    max_features: usize,
}

impl RealFeatureStore {
    pub fn new(max_features: usize) -> Self {
        Self { max_features }
    }
}

impl FeatureStore for RealFeatureStore {
    fn append_with_names(&mut self, names: &[String]) -> Result<(Vec<String>, usize), String> {
        // This is where the size limit check happens (like in Cloudflare's code)
        if names.len() > self.max_features {
            return Err(format!(
                "Too many features: {} exceeds limit of {}",
                names.len(),
                self.max_features
            ));
        }
        Ok((names.to_vec(), names.len()))
    }
}

// UNSAFE version - mirrors Cloudflare's code (line 82)
pub fn fetch_features_unsafe<T: FeatureStore>(
    features: &mut T,
    config: &Config,
) -> (Vec<String>, usize) {
    // Same pattern as Cloudflare's code: chained call, then unwrap()
    let (feature_values, count) = features
        .append_with_names(&config.feature_names)
        .unwrap(); // ← PANICS on Err!
    println!("Successfully fetched {} features", count);
    (feature_values, count)
}

// SAFE version - proper error handling
pub fn fetch_features_safe<T: FeatureStore>(
    features: &mut T,
    config: &Config,
) -> Result<(Vec<String>, usize), String> {
    match features.append_with_names(&config.feature_names) {
        Ok((feature_values, count)) => {
            println!("Successfully fetched {} features", count);
            Ok((feature_values, count))
        }
        Err(e) => {
            eprintln!("ERROR: Failed to fetch features: {}", e);
            eprintln!("Returning the error so the caller can keep its previous features");
            Err(e)
        }
    }
}

// ============================================
// PART 2: Option-based API (returns None)
// ============================================

pub trait ConfigProvider {
    fn get_setting(&self, key: &str) -> Option<String>;
    fn get_timeout(&self, key: &str) -> Option<u32>;
}

pub struct RealConfigProvider {
    settings: HashMap<String, String>,
}

impl RealConfigProvider {
    pub fn new() -> Self {
        let mut settings = HashMap::new();
        settings.insert("timeout".to_string(), "30".to_string());
        settings.insert("max_retries".to_string(), "3".to_string());
        Self { settings }
    }
}

impl ConfigProvider for RealConfigProvider {
    fn get_setting(&self, key: &str) -> Option<String> {
        self.settings.get(key).cloned()
    }

    fn get_timeout(&self, key: &str) -> Option<u32> {
        self.settings.get(key).and_then(|v| v.parse().ok())
    }
}

// UNSAFE: Chained calls with unwrap on Option
pub fn get_critical_setting_unsafe<T: ConfigProvider>(
    provider: &T,
    key: &str,
) -> String {
    let setting = provider.get_setting(key).unwrap(); // ← PANICS if None!
    println!("Got setting: {}", setting);
    setting
}

pub fn get_timeout_value_unsafe<T: ConfigProvider>(
    provider: &T,
) -> u32 {
    let timeout = provider.get_timeout("timeout").unwrap(); // ← PANICS if None!
    println!("Timeout: {}s", timeout);
    timeout
}

// SAFE: Proper handling of Option
pub fn get_critical_setting_safe<T: ConfigProvider>(
    provider: &T,
    key: &str,
) -> Result<String, String> {
    match provider.get_setting(key) {
        Some(setting) => {
            println!("Got setting: {}", setting);
            Ok(setting)
        }
        None => {
            eprintln!("ERROR: Setting '{}' not found", key);
            Err(format!("Setting '{}' not configured", key))
        }
    }
}

pub fn get_timeout_value_safe<T: ConfigProvider>(
    provider: &T,
) -> Result<u32, String> {
    match provider.get_timeout("timeout") {
        Some(timeout) => {
            println!("Timeout: {}s", timeout);
            Ok(timeout)
        }
        None => {
            eprintln!("ERROR: Timeout not configured, using default");
            Ok(30) // Use default value
        }
    }
}

// Complex chained example with multiple Options
pub fn complex_chain_unsafe<T: ConfigProvider>(provider: &T) -> String {
    // Multiple chained operations, any could return None
    let max_retries = provider.get_timeout("max_retries").unwrap(); // Could panic
    let timeout = provider.get_timeout("timeout").unwrap(); // Could panic
    let endpoint = provider.get_setting("endpoint").unwrap(); // Could panic
    format!("Endpoint: {}, Timeout: {}s, Retries: {}", endpoint, timeout, max_retries)
}

// ============================================
// TESTS - Both Result and Option examples
// ============================================

#[cfg(test)]
mod tests {
    use super::*;
    // Needs the mockall crate (e.g. under [dev-dependencies] in Cargo.toml)
    use mockall::mock;

    // ============================================
    // Mock for FeatureStore (Result-based)
    // ============================================

    mock! {
        pub FeatureStore {}
        impl FeatureStore for FeatureStore {
            fn append_with_names(&mut self, names: &[String]) -> Result<(Vec<String>, usize), String>;
        }
    }

    // ============================================
    // Mock for ConfigProvider (Option-based)
    // ============================================

    mock! {
        pub ConfigProvider {}
        impl ConfigProvider for ConfigProvider {
            fn get_setting(&self, key: &str) -> Option<String>;
            fn get_timeout(&self, key: &str) -> Option<u32>;
        }
    }

    // ============================================
    // TESTS FOR RESULT (FeatureStore)
    // ============================================

    #[test]
    fn test_result_unsafe_with_ok() {
        let mut mock = MockFeatureStore::new();
        let test_features = vec!["feature1".to_string(), "feature2".to_string()];

        // Mock returns Ok - unwrap succeeds
        mock.expect_append_with_names()
            .withf(|names| names.len() == 2)
            .times(1)
            .returning(|names| Ok((names.to_vec(), names.len())));

        let config = Config {
            feature_names: test_features.clone(),
            checksum: 0,
        };

        let (values, count) = fetch_features_unsafe(&mut mock, &config);
        assert_eq!(count, 2);
        assert_eq!(values, test_features);
    }

    #[test]
    #[should_panic(expected = "called `Result::unwrap()` on an `Err` value")]
    fn test_result_unsafe_with_err_panics() {
        let mut mock = MockFeatureStore::new();
        let too_many_features = vec![
            "feature1".to_string(),
            "feature2".to_string(),
            "feature3".to_string(),
            "feature4".to_string(),
            "feature5".to_string(),
        ];

        // Mock returns Err (simulating size limit exceeded)
        mock.expect_append_with_names()
            .times(1)
            .returning(|names| {
                Err(format!("Too many features: {} exceeds limit of 3", names.len()))
            });

        let config = Config {
            feature_names: too_many_features,
            checksum: 0,
        };

        // This PANICS because mock returns Err and we call unwrap()!
        fetch_features_unsafe(&mut mock, &config);
    }

    #[test]
    fn test_result_safe_with_err_handles_gracefully() {
        let mut mock = MockFeatureStore::new();
        let too_many_features = vec![
            "feature1".to_string(),
            "feature2".to_string(),
            "feature3".to_string(),
            "feature4".to_string(),
            "feature5".to_string(),
        ];

        // Same mock returning Err
        mock.expect_append_with_names()
            .times(1)
            .returning(|names| {
                Err(format!("Too many features: {} exceeds limit of 3", names.len()))
            });

        let config = Config {
            feature_names: too_many_features,
            checksum: 0,
        };

        // No panic! Returns Err instead
        let result = fetch_features_safe(&mut mock, &config);
        assert!(result.is_err());
        assert!(result.unwrap_err().contains("Too many features"));
    }

    #[test]
    #[should_panic]
    fn test_result_real_store_with_too_many_features_panics() {
        let mut store = RealFeatureStore::new(3);
        let config = Config {
            feature_names: vec![
                "f1".to_string(),
                "f2".to_string(),
                "f3".to_string(),
                "f4".to_string(),
            ],
            checksum: 0,
        };
        fetch_features_unsafe(&mut store, &config);
    }

    #[test]
    fn test_result_real_store_safe() {
        let mut store = RealFeatureStore::new(3);
        let config = Config {
            feature_names: vec![
                "f1".to_string(),
                "f2".to_string(),
                "f3".to_string(),
                "f4".to_string(),
            ],
            checksum: 0,
        };
        let result = fetch_features_safe(&mut store, &config);
        assert!(result.is_err());
    }

    // ============================================
    // TESTS FOR OPTION (ConfigProvider)
    // ============================================

    #[test]
    fn test_option_unsafe_with_some() {
        let mut mock = MockConfigProvider::new();

        // Mock returns Some - unwrap succeeds
        mock.expect_get_setting()
            .with(mockall::predicate::eq("database_url"))
            .times(1)
            .returning(|_| Some("postgres://localhost".to_string()));

        let result = get_critical_setting_unsafe(&mock, "database_url");
        assert_eq!(result, "postgres://localhost");
    }

    #[test]
    #[should_panic(expected = "called `Option::unwrap()` on a `None` value")]
    fn test_option_unsafe_with_none_panics() {
        let mut mock = MockConfigProvider::new();

        // Mock returns None - unwrap panics!
        mock.expect_get_setting()
            .with(mockall::predicate::eq("missing_key"))
            .times(1)
            .returning(|_| None); // ← Returns None

        // This PANICS because mock returns None!
        get_critical_setting_unsafe(&mock, "missing_key");
    }

    #[test]
    fn test_option_safe_with_none_handles_gracefully() {
        let mut mock = MockConfigProvider::new();

        // Mock returns None
        mock.expect_get_setting()
            .with(mockall::predicate::eq("missing_key"))
            .times(1)
            .returning(|_| None);

        // No panic! Returns Err instead
        let result = get_critical_setting_safe(&mock, "missing_key");
        assert!(result.is_err());
        assert!(result.unwrap_err().contains("not configured"));
    }

    #[test]
    #[should_panic(expected = "called `Option::unwrap()` on a `None` value")]
    fn test_option_timeout_unsafe_panics() {
        let mut mock = MockConfigProvider::new();

        // Mock returns None for timeout
        mock.expect_get_timeout()
            .with(mockall::predicate::eq("timeout"))
            .times(1)
            .returning(|_| None);

        get_timeout_value_unsafe(&mock);
    }

    #[test]
    fn test_option_timeout_safe_uses_default() {
        let mut mock = MockConfigProvider::new();

        // Mock returns None
        mock.expect_get_timeout()
            .with(mockall::predicate::eq("timeout"))
            .times(1)
            .returning(|_| None);

        // Safe version uses default!
        let result = get_timeout_value_safe(&mock);
        assert_eq!(result.unwrap(), 30);
    }

    #[test]
    #[should_panic(expected = "called `Option::unwrap()` on a `None` value")]
    fn test_option_complex_chain_panics_at_first_none() {
        let mut mock = MockConfigProvider::new();

        // First call returns None - panics immediately
        mock.expect_get_timeout()
            .with(mockall::predicate::eq("max_retries"))
            .times(1)
            .returning(|_| None);

        complex_chain_unsafe(&mock);
    }

    #[test]
    #[should_panic(expected = "called `Option::unwrap()` on a `None` value")]
    fn test_option_complex_chain_panics_at_second_none() {
        let mut mock = MockConfigProvider::new();

        // First succeeds
        mock.expect_get_timeout()
            .with(mockall::predicate::eq("max_retries"))
            .times(1)
            .returning(|_| Some(3));

        // Second returns None - panics here
        mock.expect_get_timeout()
            .with(mockall::predicate::eq("timeout"))
            .times(1)
            .returning(|_| None);

        complex_chain_unsafe(&mock);
    }

    #[test]
    #[should_panic(expected = "called `Option::unwrap()` on a `None` value")]
    fn test_option_complex_chain_panics_at_third_none() {
        let mut mock = MockConfigProvider::new();

        // First two succeed
        mock.expect_get_timeout()
            .with(mockall::predicate::eq("max_retries"))
            .times(1)
            .returning(|_| Some(3));

        mock.expect_get_timeout()
            .with(mockall::predicate::eq("timeout"))
            .times(1)
            .returning(|_| Some(30));

        // Third returns None - panics here
        mock.expect_get_setting()
            .with(mockall::predicate::eq("endpoint"))
            .times(1)
            .returning(|_| None);

        complex_chain_unsafe(&mock);
    }

    #[test]
    fn test_option_real_provider_with_existing_key() {
        let provider = RealConfigProvider::new();
        let result = get_critical_setting_unsafe(&provider, "timeout");
        assert_eq!(result, "30");
    }

    #[test]
    #[should_panic(expected = "called `Option::unwrap()` on a `None` value")]
    fn test_option_real_provider_with_missing_key_panics() {
        let provider = RealConfigProvider::new();
        get_critical_setting_unsafe(&provider, "nonexistent_key");
    }

    #[test]
    fn test_option_real_provider_safe_with_missing_key() {
        let provider = RealConfigProvider::new();
        let result = get_critical_setting_safe(&provider, "nonexistent_key");
        assert!(result.is_err());
    }
}
```

And when we run it:

```text
% cargo test
Compiling cloudflare_style_demo)
Finished `test` profile [unoptimized + debuginfo] target(s) in 0.21s
Running unittests src/lib.rs (target/debug/deps/cloudflare_style_demo-116bd575eecbca68)

running 16 tests
test tests::test_option_real_provider_with_existing_key ... ok
test tests::test_option_real_provider_safe_with_missing_key ... ok
test tests::test_option_complex_chain_panics_at_second_none - should panic ... ok
test tests::test_option_safe_with_none_handles_gracefully ... ok
test tests::test_option_complex_chain_panics_at_first_none - should panic ... ok
test tests::test_option_real_provider_with_missing_key_panics - should panic ... ok
test tests::test_option_complex_chain_panics_at_third_none - should panic ... ok
test tests::test_option_timeout_safe_uses_default ... ok
test tests::test_option_timeout_unsafe_panics - should panic ... ok
test tests::test_option_unsafe_with_some ... ok
test tests::test_option_unsafe_with_none_panics - should panic ... ok
test tests::test_result_real_store_safe ... ok
test tests::test_result_real_store_with_too_many_features_panics - should panic ... ok
test tests::test_result_safe_with_err_handles_gracefully ... ok
test tests::test_result_unsafe_with_ok ... ok
test tests::test_result_unsafe_with_err_panics - should panic ... ok

test result: ok. 16 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Doc-tests cloudflare_style_demo

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

cloudflare_style_demo %
```

Here we can induce the Err and None without having to create, say, an oversized config file with too many entries. As in the Hello World example, we take total control of the environment, so we can programmatically (and at scale) induce errors, or any other conditions under which the code is supposed to behave gracefully, and then assert that the right things happen and the wrong things don't.
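The "too big" scenario also suggests boundary tests: exactly at the limit should pass, one over should fail, and a rejected update should leave the previous features in place (the "wrong thing" that must not happen). A minimal sketch; `BoundedStore`, `try_replace`, and `make_names` are hypothetical, loosely modeled on `RealFeatureStore` above:

```rust
/// Hypothetical store that keeps the last accepted feature list.
pub struct BoundedStore {
    max_features: usize,
    current: Vec<String>,
}

impl BoundedStore {
    pub fn new(max_features: usize) -> Self {
        Self { max_features, current: Vec::new() }
    }

    /// Replace the feature list only if it fits; otherwise leave it untouched.
    pub fn try_replace(&mut self, names: &[String]) -> Result<usize, String> {
        if names.len() > self.max_features {
            return Err(format!(
                "Too many features: {} exceeds limit of {}",
                names.len(),
                self.max_features
            ));
        }
        self.current = names.to_vec();
        Ok(self.current.len())
    }

    pub fn current(&self) -> &[String] {
        &self.current
    }
}

/// Test helper: builds ["f0", "f1", ...] with `n` entries.
pub fn make_names(n: usize) -> Vec<String> {
    (0..n).map(|i| format!("f{}", i)).collect()
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn accepts_exactly_the_limit() {
        let mut store = BoundedStore::new(3);
        assert_eq!(store.try_replace(&make_names(3)), Ok(3));
    }

    #[test]
    fn rejects_one_over_and_keeps_previous_features() {
        let mut store = BoundedStore::new(3);
        // unwrap() is fine in a test: if this fails, the test should fail.
        store.try_replace(&make_names(2)).unwrap();
        assert!(store.try_replace(&make_names(4)).is_err());
        // The rejected update must not have replaced the good list.
        assert_eq!(store.current(), make_names(2).as_slice());
    }
}
```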

That's quite a lot of code, but it is similar to what we saw when testing the Hello World program with full error handling, logging, and test cases: a large ratio of test code to error-handling code to business logic.

Perhaps techniques like these could have helped detect and prevent this crash ahead of time.